AI scans simplify elections but risk bias. Clear rules and provenance reduce errors. With oversight, even losers can trust them.

The largest election year ever recorded coincides with the most persuasive media technology in history. In 2024, around half of humanity, about 3.7 billion people, were eligible to vote in more than 70 national elections. At the same time, a 2025 study in Nature Human Behaviour found that a leading large language model, when given minimal personal data to tailor its arguments, was more persuasive than human opponents in debate-style exchanges 64% of the time. Simply put, more people are voting just as influence is becoming cheaper and faster to produce. An “AI-assisted politician scan” that summarizes candidates’ positions, records, and trade-offs seems inevitable because it drastically reduces the time needed to understand politics. However, design choices, such as what gets summarized, how sources are weighted, and when the system refuses to respond, quietly become policy. The crucial question is not whether these tools will emerge, but whether we can design them fairly enough that even losing candidates would accept them.

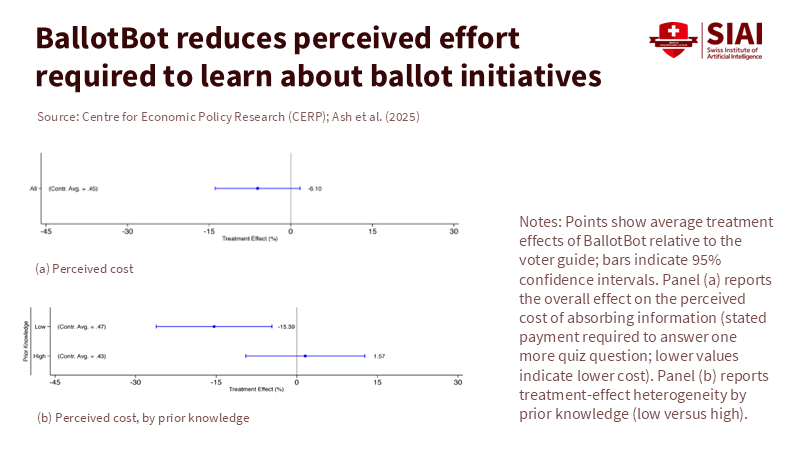

When voters face many issues and candidates, any system that makes information easier to find, understand, and compare will gain traction. Early evidence supports this. In a randomized study of California voters deciding the November 2024 ballot measures, a chatbot built on the official voter guide improved accuracy on detailed policy questions by 18% and reduced response time by about 10% compared with a standard digital guide. The chatbot also encouraged more information seeking: users were 73% more likely to explore additional propositions with its help. These are meaningful gains because they target the very obstacles that keep people from reading long documents in the week before a vote. However, the same study found no clear effect on turnout or vote direction, and knowledge gains faded without continued access, a reminder that convenience alone does not guarantee better outcomes.

The challenge lies in reliability. Independent tests during the 2024 U.S. primaries revealed that general-purpose chatbots answered more than half of basic election-administration questions incorrectly, with around 40% of responses deemed harmful or misleading. Usability research warns that when people search for information using generative tools, they may feel efficient but often overlook essential verification steps—creating an environment where plausible but incorrect answers can flourish. Survey data reflects public caution: by mid-2025, only about one-third of U.S. adults reported ever using a chatbot, and many expressed low trust in election information from these systems. In short, while AI question-answering can increase access, standard models are not yet reliable enough for essential civic information without strict controls.

Fairness adds another layer of concern. A politician scan may aim to be neutral, but how it ranks, summarizes, and refuses information can shape interpretation. We have seen this before: in lab experiments, biased search-engine rankings shifted the preferences of undecided voters by 20% or more (Epstein & Robertson, 2015), and repeated experiments have confirmed the effect. If a scan highlights one candidate’s actual votes while presenting another’s aspirational promises, or if it over-represents media coverage that favors incumbents or “front-runners,” the tool gains agenda-setting power. The solution is not to eliminate summarization. It is to treat design as a public-interest issue in which equal exposure, source balance, and contestability take priority over user-interface aesthetics.

Three design decisions determine whether a scan serves as a voter aid or acts as an unseen referee: provenance, parity, and prudence. Provenance means grounding every answer in sources. Systems that quote and link to authoritative records, such as legal texts, roll-call votes, audited budgets, and official manifestos, reduce the risk of incorrect information and are consistent with the provenance practices that risk frameworks such as NIST’s Generative AI Profile recommend. The EU’s AI Act requires clear labeling of AI-generated content and transparency for chatbots, while Spain has moved to enforce labeling with heavy fines. The Council of Europe’s 2024 AI treaty includes democratic safeguards that apply directly to election technologies. Together, these developments point to a clear minimum: scans should prioritize cited, official sources; display those sources inline; and state when the system is uncertain rather than filling in gaps.
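
To make the provenance rule concrete, here is a minimal Python sketch. It assumes a hypothetical claim/citation schema and an illustrative allow-list of official source types, neither of which comes from any existing system: a claim is shown only with its official sources inline, and a claim without one is flagged as having no official record instead of being filled in.

```python
from dataclasses import dataclass, field

# Illustrative allow-list of "official" source types (hypothetical; a real
# deployment would agree on this list with the election authority).
OFFICIAL_SOURCE_TYPES = {"legal_text", "roll_call_vote", "audited_budget", "official_manifesto"}


@dataclass
class Citation:
    source_type: str  # e.g. "roll_call_vote"
    url: str          # link shown inline next to the claim
    locator: str      # the specific clause, vote, or budget line


@dataclass
class Claim:
    text: str
    citations: list[Citation] = field(default_factory=list)


def render_claim(claim: Claim) -> str:
    """Show a claim with its official sources inline, or flag the missing record."""
    official = [c for c in claim.citations if c.source_type in OFFICIAL_SOURCE_TYPES]
    if not official:
        # Never fill the gap: state plainly that no official record was found.
        return f"No official record found for: {claim.text}"
    refs = "; ".join(f"{c.locator} <{c.url}>" for c in official)
    return f"{claim.text} [{refs}]"


if __name__ == "__main__":
    vote = Claim("Voted against Bill 12.",
                 [Citation("roll_call_vote", "https://example.org/votes/341", "Roll call 341")])
    print(render_claim(vote))
```

Keeping the allow-list explicit makes the grounding rule itself auditable: any campaign can check which source types count as official.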

Parity focuses on balanced information exposure. Summaries should be created using matched templates for each candidate: the same fields in the same order, filled with consistent documentation levels. This means parallel sections for “Voting Record,” “Budgetary Impact,” “Independent Fact-Checks,” and “Conflicts/Controversies,” all based on sources of equal credibility. It also requires enforcing “balance by design” in ranking results. When a user requests a comparison, the scan should show each candidate's stance along with their evidentiary basis side-by-side, rather than listing one under another based on popularity bias. Conceptually, this approach treats the tool like a balanced clinical trial for information, with equal input, equal format, and equal outcome measures. Practically, this strategy reduces subtle amplification effects—similar to how minimizing biased rankings in search reduces preference shifts among undecided users.
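
The matched-template idea can be checked mechanically. The Python sketch below is one possible approach, not a prescribed one: it reuses the four section names from the paragraph above as a fixed template, treats each candidate profile as a hypothetical mapping from section to source-backed entries, flags missing or unevenly documented sections, and lays candidates out side by side in alphabetical order so that popularity never sets the order.

```python
# Fixed section order shared by every candidate (section names from the text above).
TEMPLATE_FIELDS = (
    "Voting Record",
    "Budgetary Impact",
    "Independent Fact-Checks",
    "Conflicts/Controversies",
)


def parity_violations(profiles: dict) -> list:
    """Flag sections that are missing or unevenly documented across candidates.

    `profiles` maps candidate name -> {section name -> list of source-backed entries}.
    """
    problems = []
    for section in TEMPLATE_FIELDS:
        counts = {name: len(p.get(section, [])) for name, p in profiles.items()}
        missing = sorted(name for name, n in counts.items() if n == 0)
        if missing:
            problems.append(f"{section}: no entries for {', '.join(missing)}")
        elif len(set(counts.values())) > 1:
            # Equal entry counts are a crude stand-in for "consistent documentation levels".
            problems.append(f"{section}: uneven entry counts {counts}")
    return problems


def side_by_side(profiles: dict) -> list:
    """One row per section in template order; candidate columns in alphabetical order."""
    names = sorted(profiles)
    return [
        (section, ["; ".join(profiles[n].get(section, [])) or "(no official record)" for n in names])
        for section in TEMPLATE_FIELDS
    ]
```

Equal entry counts are only a rough proxy; a real audit would also compare source credibility. Alphabetical order is one neutral choice, and a per-session random order is another.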

Prudence concerns the risks of persuasion and data use. Large language models can now out-argue individuals at scale, especially when given even minimal personal data. Targeted persuasion through a politician scan is therefore a real risk, not a theoretical one. One safeguard is a “no-personalization” rule for political queries: the scan can adapt to issues (showing more fiscal detail to users focused on budgets) but not to demographics or inferred voting intentions. A second is an “abstain-when-uncertain” policy: if the system cannot cite an official source or resolve discrepancies between sources, it should decline to answer and direct the user to the authoritative page, such as the election commission or the parliamentary database, rather than guess. A third is to log and review factual accuracy. Election officials or approved auditors should track aggregate metrics, including the share of answers with official citations, the abstention rate, and the correction rate after review, so the scan remains accountable throughout the election rather than only during a pre-launch assessment.
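
The abstain-when-uncertain rule and the audit metrics fit in a few lines. This Python sketch assumes, hypothetically, that an upstream retrieval step reports how many official sources support a draft answer and whether those sources conflict; the fallback URL is a placeholder for the election commission or parliament page.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    text: str
    official_citations: int
    abstained: bool
    corrected_after_review: bool = False  # set later by human reviewers


def answer_or_abstain(draft: str, official_citations: int, sources_conflict: bool,
                      fallback_url: str) -> Answer:
    """Release the draft only if it is grounded and uncontested; otherwise redirect."""
    if official_citations == 0 or sources_conflict:
        return Answer(f"I can't confirm this from an official source. Please see {fallback_url}.",
                      official_citations=0, abstained=True)
    return Answer(draft, official_citations, abstained=False)


def audit_metrics(log: list) -> dict:
    """Aggregate metrics an election authority or auditor could publish (percentages)."""
    if not log:
        return {"cited_share": 0.0, "abstention_rate": 0.0, "correction_rate": 0.0}
    delivered = [a for a in log if not a.abstained]
    n_delivered = max(len(delivered), 1)  # avoid division by zero if everything abstained
    return {
        # Share of delivered answers carrying an official citation (should stay at 100).
        "cited_share": 100 * sum(a.official_citations > 0 for a in delivered) / n_delivered,
        "abstention_rate": 100 * sum(a.abstained for a in log) / len(log),
        "correction_rate": 100 * sum(a.corrected_after_review for a in delivered) / n_delivered,
    }
```

Publishing these numbers throughout the election, rather than once before launch, is what makes the scan accountable; a cited share below 100 would reveal that the gate had been bypassed.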

What would lead a losing candidate to find the tool fair? A credible agreement with four main components: symmetrical inputs, visible provenance, contestable outputs, and independent oversight. Symmetrical inputs imply that every candidate's official documents are processed to the same depth and updated on the same schedule, with a public record that any campaign can verify. Visible provenance requires that every claim link back to a specific clause, vote, or budget line; where no official record exists, the scan should indicate that and refrain from speculating. Contestable outputs allow each campaign to formally challenge inaccuracies when the scan misstates a fact, ensuring timely corrections and a public change log. Independent oversight involves an election authority or accredited third party conducting continuous tests—using trick questions about registration deadlines, ballot drop boxes, or eligibility rules that have previously caused issues—and publicly reporting the success rate every month during the election period. This transforms “trust us” into “trust, but verify.”
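
The independent-oversight component can run as a recurring red-team battery. The Python sketch below assumes a hypothetical `scan` callable that returns the scan's text reply, and scores each reply as correct (it contains the key fact from the official record), safely redirected (it points to an official source), or wrong. The probe questions and the redirect marker are placeholders that an election authority would replace with its own.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Probe:
    question: str
    must_contain: str  # key fact from the official record, e.g. a deadline


def run_battery(scan: Callable[[str], str], probes: list,
                redirect_marker: str = "official source") -> dict:
    """Return the share of correct, safely redirected, and wrong replies."""
    counts = {"correct": 0, "redirected": 0, "wrong": 0}
    for probe in probes:
        reply = scan(probe.question).lower()
        if probe.must_contain.lower() in reply:
            counts["correct"] += 1
        elif redirect_marker.lower() in reply:
            counts["redirected"] += 1  # a safe abstention, reported separately
        else:
            counts["wrong"] += 1
    return {k: v / len(probes) for k, v in counts.items()}
```

Reporting all three shares each month separates harmless redirects from genuinely wrong answers, which a single "success rate" would blur.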

None of this works without limits on persuasion and targeted messaging. A politician scan should inform, not motivate. That means no calls to action tailored to the user’s identity or inferred preferences, no generation of campaign slogans, and no ranking changes based on a visitor’s profile. If a user asks, “Which candidate should I choose if I value clean air and low taxes?” the scan should lay out traceable trade-offs and voting records rather than recommend a choice, especially because modern models can shift opinions even when their messages are labeled “AI-generated.” Some jurisdictions are already adopting this perspective: the EU’s AI Act includes transparency and anti-manipulation measures, national governments are moving to enforce labeling, and an emerging international treaty sets out AI duties in democratic settings. A responsible scan will operate within these guidelines by default, treating them as foundations rather than as compliance tasks to be retrofitted later.
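
One way to enforce these limits is to make profile-based ranking structurally impossible. In this minimal Python sketch, where the signal names and the allow-list are hypothetical, only issue interests survive sanitization and may change which sections are expanded, while the function that orders candidates takes no user data at all.

```python
# Hypothetical allow-list: only issue-based signals may shape the display.
ALLOWED_SIGNALS = frozenset({"issue_interests"})


def sanitize_context(user_context: dict) -> dict:
    """Drop every signal not explicitly allowed (demographics, inferred party, history, etc.)."""
    return {k: v for k, v in user_context.items() if k in ALLOWED_SIGNALS}


def sections_to_expand(user_context: dict) -> list:
    """Issue interests change which details are expanded, identically for every candidate."""
    return sorted(sanitize_context(user_context).get("issue_interests", []))


def candidate_order(candidates: list) -> list:
    """Takes no user data, so the order cannot vary with a visitor's profile."""
    return sorted(candidates)
```

An allow-list is the safer default here: a profile field added later is excluded automatically instead of leaking into the output.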

Finally, prudence means planning for errors. Consider a national voter-information portal that receives one million scan queries in the fortnight before an election. If unrestricted chatbots fail on 50% of election-logistics questions in stress tests, and a grounded scan lowers that error rate to around 5% (a conservative benchmark based on the performance gap between general-purpose and specialized models), that still means roughly 50,000 incorrect answers, unless the system also abstains when uncertain, sends procedural questions to official resources, and deploys corrections quickly. The point is simple: scale turns small error rates into significant civic consequences. The only reliable remedies are constraints, abstention when unsure, and transparency, not clever engagement strategies.
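
The arithmetic behind this example is worth making explicit. The Python sketch below multiplies query volume by error rate; the `caught_share` parameter, the fraction of would-be errors that abstention and redirection turn into safe referrals, is a hypothetical knob, and the 80% value used below is purely illustrative.

```python
def expected_wrong_answers(queries: int, error_rate: float, caught_share: float = 0.0) -> int:
    """Expected wrong answers after a share of would-be errors becomes abstentions/redirects."""
    return round(queries * error_rate * (1 - caught_share))


QUERIES = 1_000_000  # scan queries in the fortnight before the election (from the text)

print(expected_wrong_answers(QUERIES, 0.50))       # unrestricted chatbot: 500000
print(expected_wrong_answers(QUERIES, 0.05))       # grounded scan: 50000
print(expected_wrong_answers(QUERIES, 0.05, 0.8))  # grounded scan catching 80% of its errors (hypothetical): 10000
```

Even under that optimistic assumption, ten thousand voters would still receive a wrong answer, which is why fast corrections matter as much as headline accuracy.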

The opening facts will not change: billions of voters, and AI that can out-argue us when given personal data. The choice is not between an AI-assisted politician scan and the status quo; it is between a carefully governed tool and countless unaccountable summaries. The good news is that we already understand the practical elements. Grounding in official sources adds depth without visible bias. Consistent templates and rankings curb subtle influence. Abstention and provenance reduce errors. Regular testing and public metrics let administrators monitor quality in real time. When these elements are built into procurement and deployment, the scan shifts from an unseen editor of democracy to a public service, one that even a losing candidate can accept as a necessary way to clarify elections for busy citizens. The world's largest election cycle will not be the last; if we want the next one to be fairer, we should adopt this agreement now and measure it as if our votes depend on it.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

Associated Press (2024). Chatbots’ inaccurate, misleading responses about U.S. elections threaten to keep voters from polls. April 16, 2024.

Council of Europe (2024). Framework Convention on Artificial Intelligence and human rights, democracy and the rule of law. Opened for signature Sept. 5, 2024.

Epstein, R., & Robertson, R. E. (2015). The search engine manipulation effect (SEME) and its possible impact on the outcomes of elections. Proceedings of the National Academy of Sciences.

European Parliament (2025). EU AI Act: first regulation on artificial intelligence (overview). Feb. 19, 2025.

Gallegos, I. O., Shani, C., Shi, W., et al. (2025). Labeling Messages as AI-Generated Does Not Reduce Their Persuasive Effects. arXiv preprint.

Nielsen Norman Group (2023). Information Foraging with Generative AI: A Study of 3,000+ Conversations. Sept. 24, 2023.

NIST (2024). Generative AI Profile: A companion to the AI Risk Management Framework (AI RMF 1.0). July 26, 2024.

Pew Research Center (2025). Artificial intelligence in daily life: Views and experiences. April 3, 2025.

Salvi, F., et al. (2025). On the conversational persuasiveness of GPT-4. Nature Human Behaviour. May 2025.

Stanford HAI (2024). Artificial Intelligence Index Report 2024.

UNDP (2024). A “super year” for elections: 3.7 billion voters in 72 countries. 2024.

Ash, E., Galletta, S., & Opocher, G. (2025). BallotBot: Can AI Strengthen Democracy? CEPR Discussion Paper 20070; VoxEU/CEPR column and working paper PDF.

Reuters (2025). Spain to impose massive fines for not labelling AI-generated content. March 11, 2025.

Robertson, R. E., et al. (2017). Suppressing the Search Engine Manipulation Effect (SEME). ACM Conference on Web Science.

Time (2023). Elections around the world in 2024. Dec. 28, 2023 (context on global electorate share).

Washington Post (2025). AI is more persuasive than a human in a debate, study finds. May 19, 2025.

AI prices reflect scarce compute and network effects, not just hype. Educators must teach market dynamics and govern AI use. Turn volatility into lasting learning gains.

In a field long dominated by cash-flow models and neat multiples, one number stands out: by 2030, global data-center electricity use is expected to more than double to around 945 terawatt-hours, slightly more than Japan's entire annual electricity consumption today, with AI the largest driver of the increase. Whatever our views on price-to-earnings ratios or “justified” valuations, the physical build-out is real. It involves steel, concrete, grid interconnects, substations, and heat rejection, all financed rapidly by firms eager to meet demand. Today’s AI equity markets reflect more than forecasts of future cash flows; they are a live auction for scarce compute, power, and attention. If education leaders continue to treat the valuation debate as a purely financial exercise, we risk missing a critical point: the market is sending a clear signal about capabilities, bottlenecks, and network effects. Our goal is to prepare students and institutions to read hype intelligently, use it where it creates real options, and resist it where it diverts resources that classrooms need for effective learning.

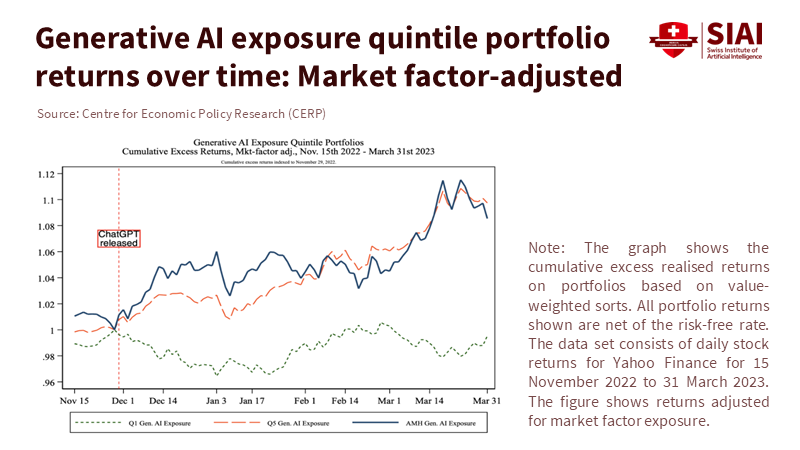

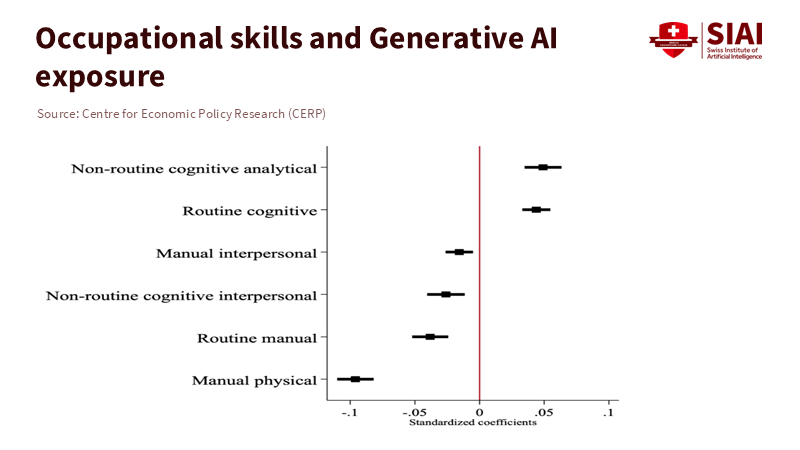

Traditional valuation holds that price equals the discounted value of expected cash flows, with the discount rate adjusted for risk. This approach works well in stable markets but struggles when a technological shock changes constraints faster than accounting can keep up. The current AI cycle is less about today’s profits and more about securing three interconnected scarce inputs (compute, energy, and high-quality data) and about capturing the user attention that turns those inputs into platforms. In this environment, supply-and-demand dynamics dominate: when capacity is limited and ecosystems raise switching costs, prices can exceed “intrinsic value” because the option to participate tomorrow becomes valuable today. A now well-known analysis (Eisfeldt, Schubert, and Zhang, 2023) found that companies whose workforces were more exposed to generative AI earned significant excess returns in the two weeks after ChatGPT’s release. This pattern is consistent with investors paying upfront for a quasi-option on future productivity gains before those gains appear in income statements.
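
The gap between discounted cash flows and what the market pays can be made concrete. This Python sketch assumes a constant annual discount rate, end-of-year cash flows, and no terminal value, all simplifications. The residual it computes is at most an upper bound on any "option premium," since it also absorbs mispricing and forecast error, and the figures in the example are hypothetical.

```python
def present_value(cash_flows: list, rate: float) -> float:
    """Discount end-of-year cash flows for years 1..T at a constant annual rate."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


def price_residual(market_price: float, cash_flows: list, rate: float) -> float:
    """Portion of the price not explained by discounted expected cash flows."""
    return market_price - present_value(cash_flows, rate)


# Hypothetical firm: 10 per year for three years, 10% discount rate, trading at 40.
pv = present_value([10, 10, 10], 0.10)
print(f"PV = {pv:.2f}, residual = {price_residual(40, [10, 10, 10], 0.10):.2f}")  # PV = 24.87, residual = 15.13
```

A large residual does not prove irrationality or a real option on its own; it only measures how much of the price must be justified by something other than the forecast cash flows.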

The term “hype” is not incorrect, but it is incomplete. Network economics shows that who is connected and how intensely they interact can create value only loosely associated with near-term cash generation. Metcalfe-style relationships—where network value grows non-linearly with users and interactions—have concrete relevance in crypto assets and platform contexts, even if they don’t directly translate to revenue. Applying that concept to AI, parameter counts and data pipelines matter less than the active use of those resources: the number of students, educators, and developers engaging with AI services. In valuation terms, this usage can act as an amplifier for future cash flows or as a real option that broadens the potential for profitable product lines. An education policy that views all this as irrational mispricing will misallocate scarce attention within institutions. The market is telling us which bottlenecks and complements truly matter.
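
A Metcalfe-style relationship is easy to state in code. This sketch counts the possible pairwise links among users, n(n - 1)/2, which grows roughly with n squared. The scaling constant `k` (value per active link, which could also absorb interaction intensity) is a hypothetical parameter, and nothing here claims the law holds precisely for AI services.

```python
def metcalfe_value(active_users: int, k: float = 1.0) -> float:
    """Metcalfe-style proxy: k times the number of possible pairwise links among users."""
    return k * active_users * (active_users - 1) / 2


def linear_value(active_users: int, k: float = 1.0) -> float:
    """Baseline in which value simply scales with the user count."""
    return k * active_users


for n in (1_000, 2_000, 4_000):
    print(n, linear_value(n), metcalfe_value(n))
```

Doubling active users from 1,000 to 2,000 doubles the linear measure but roughly quadruples the Metcalfe-style one (from 499,500 to 1,999,000 links), which is why active usage, rather than parameter counts, is the quantity to watch.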

The numbers paint a mixed but clear picture. On valuations, NVIDIA's price-to-earnings ratio recently hovered around 50, high by historical standards and an easy target for bubble talk. On investment, Alphabet raised its 2025 capital expenditure guidance from $75 billion to about $85 billion, and Meta now expects $66–72 billion in 2025 capex, explicitly tied to AI data-center construction. Meanwhile, U.S. data-center construction reached a record annualized pace of $40 billion in June 2025, and both the IEA and the U.S. EIA project strong electricity demand growth in the mid-2020s, with AI-related computing a significant contributor. These figures reflect committed plans, not mere speculation. Rigorous field data, however, complicate the productivity narrative. A randomized controlled trial by METR found that experienced open-source developers using early-2025 AI coding tools took 19% longer to complete real tasks, suggesting that current tools can slow work in high-skill settings. And a report from MIT Media Lab's Project NANDA argues that 95% of enterprise generative-AI projects have shown no measurable profit impact so far. This gap between adoption and transformation should make both investors and decision-makers cautious.

If this seems contradictory, the contradiction comes from expecting a single data point, whether stock multiples or a standout case study, to answer broader questions. A more honest interpretation is that we are witnessing the early dynamics of platform formation: substantial capital expenditure to ease compute and power constraints, widespread adoption of generative AI tools in firms and universities, and an uneven, often delayed, translation into productivity and profits. McKinsey estimates that generative AI could eventually add $2.6–4.4 trillion annually across the use cases it analyzed. Its 2025 survey shows that 78% of organizations use AI in at least one business function and that more than 70% use generative AI: high adoption alongside disappointing short-term returns on investment. In higher education, 57% of leaders now see AI as a “strategic priority,” yet fewer than 40% of institutions report having mature acceptable-use policies, a sign of a sector deploying tools faster than it governs them. The right takeaway for educators is not “this is a bubble, stand down” but “we are in a market for expectations where the main limit is our capacity to turn tools into outcomes.”

The first step is to teach the real economics we are living through. Business, public policy, and data science curricula should move beyond the mechanics of discounted cash flow to cover network economics, market microstructure under scarcity, and real options on intangible assets such as data. Students should learn how an increase in GPU supply or a new substation interconnect can shift market power and pricing far from the classroom. They should also understand how platform lock-in and ecosystems affect not only company strategies but also public goods. Methodologically, programs should build brief “method notes” into coursework and capstone projects that require students to make explicit, rough estimates, such as how per-user costs change when a campus model serving 10,000 users must meet a latency target. This literacy reframes the discussion: instead of a static argument over “bubble versus fundamentals” or P/E ratios, it becomes a dynamic problem of relieving bottlenecks and valuing options under uncertainty.
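
A "method note" of this kind might look like the Python sketch below. Every number is a hypothetical placeholder: 20 requests per user per day, 15% of daily traffic in the peak hour, GPUs rented at $2.50 per hour and provisioned for peak around the clock, and per-GPU throughput that falls from 4 to 1.5 requests per second when a tighter latency target forces smaller batches.

```python
import math


def per_user_monthly_cost(users: int, requests_per_user_per_day: float, peak_hour_share: float,
                          rps_per_gpu: float, gpu_cost_per_hour: float,
                          hours_per_month: float = 730) -> float:
    """Provision GPUs for the peak hour, run them all month, and split the bill per user."""
    peak_rps = users * requests_per_user_per_day * peak_hour_share / 3600  # requests per second
    gpus = math.ceil(peak_rps / rps_per_gpu)
    return gpus * gpu_cost_per_hour * hours_per_month / users


# Hypothetical campus deployment serving 10,000 users.
relaxed = per_user_monthly_cost(10_000, 20, 0.15, rps_per_gpu=4.0, gpu_cost_per_hour=2.50)
strict = per_user_monthly_cost(10_000, 20, 0.15, rps_per_gpu=1.5, gpu_cost_per_hour=2.50)
print(f"relaxed latency: ${relaxed:.4f}/user/month; strict latency: ${strict:.4f}/user/month")
```

Under these made-up inputs the stricter latency target doubles the GPU count (from 3 to 6) and the per-user cost (from about $0.55 to about $1.10 per month); the point of the exercise is to make such sensitivities explicit, not to predict real prices.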

Second, administrators should treat AI spending as a portfolio of real options rather than a single bet. Centralized, vendor-locked tool purchases may promise speed but can leave stranded costs if teaching methods don’t adapt. Smaller, domain-specific trials may cost more per unit but reveal where AI complements human expertise and where it substitutes for it. The MIT NANDA finding of minimal profit impact is not a reason to stop; it is a reason to phase in deliberately: begin where workflows are established, evaluation is precise, and equity risks are manageable. Start with academic advising, formative feedback, scheduling, and back-office automation before moving to high-stakes assessment or admissions. Build dashboards that track business and learning outcomes, not just token or prompt counts, and subject them to the same auditing standards we apply to research compliance. The takeaway is clear: adoption is easy; integration is hard. Governance is what separates a cost center from a real capability.
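
The real-options framing can be illustrated with a two-stage decision. In this Python sketch all figures are hypothetical, and it assumes that a pilot fully reveals whether the tool works and that waiting does not change rollout costs or payoffs. Under those assumptions, the value of piloting first is the difference between the staged and commit-now expected values.

```python
def commit_now_ev(p_success: float, payoff_success: float, payoff_failure: float,
                  rollout_cost: float) -> float:
    """Expected value of a full rollout decided today, before knowing whether it works."""
    return p_success * payoff_success + (1 - p_success) * payoff_failure - rollout_cost


def staged_ev(p_success: float, payoff_success: float, payoff_failure: float,
              rollout_cost: float, pilot_cost: float) -> float:
    """Pay for a pilot, then scale only if scaling pays off (the stopping rule)."""
    scale_if_success = max(payoff_success - rollout_cost, 0.0)
    scale_if_failure = max(payoff_failure - rollout_cost, 0.0)
    return -pilot_cost + p_success * scale_if_success + (1 - p_success) * scale_if_failure


# Hypothetical: 40% chance the tool works; rollout costs 1.0M; pilot costs 0.1M.
args = dict(p_success=0.4, payoff_success=2_500_000, payoff_failure=200_000, rollout_cost=1_000_000)
now = commit_now_ev(**args)
staged = staged_ev(**args, pilot_cost=100_000)
print(f"commit now: {now:,.0f}; pilot first: {staged:,.0f}; option value: {staged - now:,.0f}")
```

With these invented numbers, piloting first is worth about 380,000 more than committing now, because the pilot lets the institution walk away in the 60% of cases where a full rollout would lose money.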

Third, treat compute and energy as essential infrastructure for learning, with sustainability built in from the start. The IEA’s forecast that data-center electricity demand will double by 2030 means that campuses adopting AI at scale need to plan for power, cooling, and green procurement, or risk fragile dependencies and public backlash. Collaborative models can help share fixed costs: regional university alliances can negotiate cloud credits, co-locate small inference clusters with local energy plants, or sign power-purchase agreements that prioritize renewable energy. Where possible, choose open standards and interoperability to lower switching costs and strengthen negotiating power with vendors. Tie every infrastructure dollar to student outcomes, whether through course redesigns that demonstrably improve persistence, AI support integrated into writing curricula, or accessible tutoring aimed at closing equity gaps. Infrastructure without a pedagogical purpose is just asset accumulation.
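
Planning for power starts with a standard back-of-envelope formula: annual facility energy equals average IT load times hours times PUE (power usage effectiveness, total facility energy divided by IT energy). The 50 kW cluster, 70% average utilization, and PUE of 1.4 below are hypothetical inputs for a small campus inference cluster, not figures from the IEA.

```python
HOURS_PER_YEAR = 8760


def annual_facility_mwh(it_load_kw: float, pue: float, utilization: float = 1.0) -> float:
    """Yearly facility energy in MWh: average IT draw x hours x PUE."""
    return it_load_kw * utilization * HOURS_PER_YEAR * pue / 1000


def cooling_and_overhead_mwh(it_load_kw: float, pue: float, utilization: float = 1.0) -> float:
    """Non-IT share of the energy (cooling, power conversion): IT energy x (PUE - 1)."""
    return it_load_kw * utilization * HOURS_PER_YEAR * (pue - 1) / 1000


total = annual_facility_mwh(50, pue=1.4, utilization=0.7)
overhead = cooling_and_overhead_mwh(50, pue=1.4, utilization=0.7)
print(f"about {total:.0f} MWh/year, of which about {overhead:.0f} MWh is cooling and overhead")
```

Separating the overhead term shows why cooling efficiency belongs in procurement decisions: at a PUE of 1.4, nearly a third of the facility's energy never reaches the GPUs.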

Fourth, adjust governance to match how capabilities actually spread. EDUCAUSE’s 2025 study shows that enthusiasm is outpacing policy depth, which can lead to inconsistent practices and reputational risk. Institutions should publish clear, up-to-date use policies that outline permitted tasks, data-handling rules, attribution norms, and escalation procedures. Pair these with revised assessments (more oral presentations, in-class synthesis, and comprehensive portfolios) to decouple grading from text generation and clarify AI’s role. A parallel faculty-development track should focus on low-risk enhancements, including AI for large-scale feedback, formative analytics, and broader access to research-grade tools. The aim is not to replace teachers with automation but to increase human interaction where judgment and compassion improve learning.

Fifth, resist the comfort of simplistic narratives. Some financial analyses argue that technology valuations remain reasonable once growth is factored in, while others warn of painful corrections. Both can be partly right. For universities, the critical question is exposure management: which investments generate option value in both scenarios? Promoting valuation literacy across disciplines, funding course redesigns centered on AI-assisted problem-solving, establishing computational “commons” that lower the cost of experimentation, and strengthening institutional research capacity to measure learning effects all hedge against both optimistic and pessimistic market outcomes. In market terms, this is a barbell strategy: low-risk, high-utility additions at scale, alongside a few high-risk pilots with clear stopping criteria.

A final note on “irrationality.” The most straightforward critique of AI valuations is that many projects fail and productivity gains vary. Both claims are true in the short term. However, markets can rationally price path dependence: once a few platforms gather developers, data, and distribution, both the steepness of the adoption curve and the cost of displacing incumbents change. This does not validate every valuation; it explains why prices can exceed discounted cash flows while binding constraints are being relaxed. The education sector’s mistake would be to dismiss this signal on principle or, worse, to imitate it uncritically with high-profile but low-impact spending. The right stance is not mere skepticism but disciplined opportunism: turning volatile expectations into lasting learning capabilities.

Returning to the key fact: data-center electricity demand is set to double this decade, with AI as the driving force. We can debate whether current equity prices exceed “intrinsic value.” What we cannot ignore is that the investments they fund create the capacity, and the dependencies, that will shape our students’ careers. Education policy must stop treating valuation as a moral debate and start reading it as market data. We should teach students how supply constraints, network effects, and option value affect prices; govern institutional adoption so that pilots mature into workflows; and invest in sustainable compute so that pedagogy, not publicity, shapes our AI footprint. Done well, this turns a noisy, hype-filled cycle into quieter progress: more time for the essential work of teachers and students, wider access to quality feedback, and graduates who can tell the difference between narratives about the future and the systems actually building it. This is a call to action that fits the moment and the best safeguard against whatever the market decides next.

Atlantic, The. (2025). Just How Bad Would an AI Bubble Be? Retrieved September 2025.

Eisfeldt, A. L., Schubert, G., & Zhang, M. B. (2023). Generative AI and firm valuation. VoxEU column, CEPR.

EDUCAUSE. (2025). 2025 EDUCAUSE AI Landscape Study: Introduction and Key Findings.

EdScoop. (2025). Higher education is warming up to AI, new survey shows. (Reporting 57% of leaders view AI as a strategic priority.)

International Energy Agency (IEA). (2025). AI is set to drive surging electricity demand from data centres… (News release and Energy & AI report).

Macrotrends. (2025). NVIDIA PE Ratio (NVDA).

McKinsey & Company. (2023). The economic potential of generative AI: The next productivity frontier.

McKinsey & Company (QuantumBlack). (2025). The State of AI: How organizations are rewiring to capture value (survey report).

METR (Model Evaluation & Threat Research). Becker, J., et al. (2025). Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity (arXiv preprint & summary).

MIT Media Lab, Project NANDA. (2025). The GenAI Divide: State of AI in Business 2025 (preliminary report) and Fortune coverage.

Reuters. (2025). Alphabet raises 2025 capital spending target to about $85 billion; U.S. data centre build hits record as AI demand surges.

U.S. Department of Energy / EPRI. (2024–2025). Data centre energy use outlooks and U.S. demand trends (DOE LBNL report; DOE/EPRI brief).

U.S. Energy Information Administration (EIA). (2025). Short-Term Energy Outlook; Today in Energy: Electricity consumption to reach record highs.

UBS Global Wealth Management. (2025). Are we facing an AI bubble? (market note).

Meta Platforms, Investor Relations. (2025). Q1 and Q2 2025 results; 2025 capex guidance $66–72B.

Alphabet (Google) Investor Relations. (2025). Q1 & Q2 2025 earnings calls noting capex levels.

Bakhtiar, T. (2023). Network effects and store-of-value features in the cryptocurrency market (empirical evidence on Metcalfe-type relationships).
