Post hoc, ergo propter hoc – impossible challenges in finding causality in data science

Data Science can find correlation but not causality

In statistics, a high correlation without any causal link is called ‘spurious regression’

Hallucinations in LLMs are representative examples of spurious correlation

Imagine twin boys living in the same neighborhood. One prefers to play outside day and night, while the other mostly sticks to his video games. A year later, doctors find that the gamer boy is much healthier and conclude that playing outside is bad for growing children’s health.

What do you think? Do you agree with the conclusion?

Even without much scientific training, we can almost immediately dismiss this conclusion. It rests on lopsided logic and possibly on insufficient information about the neighborhood. For example, if the neighborhood is as radioactively contaminated as Chernobyl or Fukushima, playing outside could be close to suicidal. What about better nutrition for the gamer boy, thanks to easier access to food at home? The gamer boy only has to put down the game console for 5 seconds to eat something, while his twin has to walk or run for 5 minutes to get back home for food.

In fact, there are infinitely many potential variables that may have affected the twins’ health. From the data collected above, the best we can say is that, for an unknown reason, the gamer boy is medically healthier than his twin.

In more scientific terms, statistics is known for establishing correlation, not causality. Even in a controlled environment, it is hard to argue that the controlled variable caused the effect. Researchers only ‘guess’ that the correlation means causality.

Post Hoc, Ergo Propter Hoc

This famous Latin phrase means “after this, therefore on account of this”. In plain English, it claims that one event caused the event that occurred right after it. You do not need rocket science to rebut the claim that two random events are connected just because one occurred right after the other. It is a very common logical mistake that assigns causality based on the order of events alone.
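A small simulation with made-up probabilities shows how weak ‘B happened right after A’ evidence is. Below, events A and B are generated independently, yet B follows A thousands of times. If we compare the rate of B on days after A with the overall rate of B, we see that the ordering carries no information. A gap between the two rates would still show only association, not causation.

```python
import random


def post_hoc_check(days=100_000, p_a=0.5, p_b=0.3, seed=7):
    """Two hypothetical daily events A and B, generated completely independently."""
    rng = random.Random(seed)
    a = [rng.random() < p_a for _ in range(days)]
    b = [rng.random() < p_b for _ in range(days)]  # b never looks at a
    # Outcomes of B on every day that immediately follows a day with A.
    b_after_a = [b[t] for t in range(1, days) if a[t - 1]]
    times_b_followed_a = sum(b_after_a)
    p_b_given_a_before = times_b_followed_a / len(b_after_a)
    p_b_overall = sum(b) / days
    return times_b_followed_a, p_b_given_a_before, p_b_overall


followed, p_cond, p_base = post_hoc_check()
print(f"B followed A {followed} times; P(B | A the day before) = {p_cond:.3f}, P(B) = {p_base:.3f}")
```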

In statistics, it is often said that ‘correlation does not necessarily imply causality’. In the same spirit, a regression that finds a strong relationship without any causal link is called a ‘spurious regression’, and such regressions have been widely reported in engineers’ applications of data science.

One notable example is ‘hallucination’ in ChatGPT. The LLM only finds high correlations between two words, two sentences, or two bodies of text (or, these days, images), but it fails to discern the causal relations embedded in the two data sets.
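The following is a deliberately crude toy: a greedy bigram next-word predictor. It is not how ChatGPT works internally, since real LLMs use neural networks over long contexts. It only illustrates the mechanism described above, where choosing the statistically strongest continuation turns a corpus of true sentences into a fluent false one. The three-sentence corpus is invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical three-sentence corpus; every sentence in it is true.
corpus = [
    "the capital of australia is canberra",
    "the largest city of australia is sydney",
    "the harbour city of australia is sydney",
]

# Count which word follows which (bigram co-occurrence counts).
followers = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        followers[prev][nxt] += 1


def complete(prompt, max_new_words=1):
    """Greedily append the word that most often followed the last word in the corpus."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = followers.get(words[-1])
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


print(complete("the capital of australia is"))  # prints: the capital of australia is sydney
```

Because ‘is sydney’ co-occurs more often than ‘is canberra’, the model completes the prompt with the wrong capital. Nothing in the counts encodes why one word follows another.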

Statisticians have long worked to distinguish causal relationships from mere correlation, but the best we have so far is ‘Granger causality’. It tests only whether the past values of one variable help predict another. At best it lets us rule out causality, and it can never confirm it, because a hidden third variable may drive both series. In that sense, Granger causality offers a philosophical frame for testing whether a third variable could be the hidden cause behind an apparent relationship. Professor Clive Granger shared the 2003 Nobel Memorial Prize in Economic Sciences, and the lasting lesson of his research is that it is mechanically (or philosophically) impossible to verify a causal relationship from correlation alone.
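Here is a bare-bones, one-lag Granger test in plain Python that shows what the test actually measures. It is an F-test of whether lagged `x` improves a forecast of `y` beyond `y`’s own lag. The data are simulated under a hypothetical setup: a hidden series `z` drives `x` one step ahead and `y` two steps ahead, while `x` never affects `y`. In practice one would use a maintained implementation such as `grangercausalitytests` in statsmodels, which handles lag selection and proper p-values. The hand-rolled OLS here only keeps the example dependency-free.

```python
import random


def ols_rss(rows, target):
    """Residual sum of squares of an OLS fit, solving the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(rows, target))] for i in range(k)]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for i in range(col + 1, k):
            factor = a[i][col] / a[col][col]
            for j in range(col, k + 1):
                a[i][j] -= factor * a[col][j]
    # Back substitution.
    beta = [0.0] * k
    for i in reversed(range(k)):
        beta[i] = (a[i][k] - sum(a[i][j] * beta[j] for j in range(i + 1, k))) / a[i][i]
    return sum((t - sum(b * v for b, v in zip(beta, r))) ** 2 for r, t in zip(rows, target))


def granger_f(x, y):
    """F-statistic for 'x Granger-causes y' with one lag: does x[t-1] improve the
    forecast of y[t] beyond a constant and y[t-1]?"""
    target = y[1:]
    restricted = [[1.0, y[t - 1]] for t in range(1, len(y))]
    unrestricted = [[1.0, y[t - 1], x[t - 1]] for t in range(1, len(y))]
    rss_r, rss_u = ols_rss(restricted, target), ols_rss(unrestricted, target)
    n, k, q = len(target), 3, 1  # observations, unrestricted parameters, restrictions
    return ((rss_r - rss_u) / q) / (rss_u / (n - k))


rng = random.Random(1)
n = 2000
z = [rng.gauss(0, 1) for _ in range(n)]  # hidden driver, never given to the test
x = [0.0] + [z[t - 1] + 0.5 * rng.gauss(0, 1) for t in range(1, n)]       # reacts to z after 1 step
y = [0.0, 0.0] + [z[t - 2] + 0.5 * rng.gauss(0, 1) for t in range(2, n)]  # reacts to z after 2 steps
unrelated = [rng.gauss(0, 1) for _ in range(n)]

f_hidden = granger_f(x, y)             # large, although x never affects y
f_unrelated = granger_f(unrelated, y)  # small, as expected for an unrelated series
print(f"F(x -> y) = {f_hidden:.1f}, F(unrelated -> y) = {f_unrelated:.1f}")
```

The test flags `x` as ‘Granger-causing’ `y` with a very large F-statistic, purely because `x` reacts to the hidden driver first. Predictive precedence is all the test can see.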

Why does AI ultimately need human approval?

Given the nature of current AI models, the post hoc fallacy is an unavoidable hurdle that all data scientists have to deal with. Unlike simple regression-based research, LLMs rely on such a large body of data that it is practically impossible to check every connection between two bodies of text.

This is where human approval is required, unless data scientists decide to fine-tune the LLM so that it offers only the most probable (and thus more likely causal) matches. The more likely the matches are, the less likely there will be spurious connections between two sets of information, assuming the underlying data come from accurate sources.

In teaching AI and data science, I am surprised how often I come across ‘fake experts’ whose only understanding of AI is a handful of terms from newspapers, or a few lines of media articles at best. They have no in-depth training in basic academic tools such as math and statistics. I raise Granger causality as my counterargument, pointing out that it is impossible to get from correlation to causality by statistical methods alone (and, above all, philosophically impossible). Many of them then ask, “Then, wouldn’t it be possible with AI?”

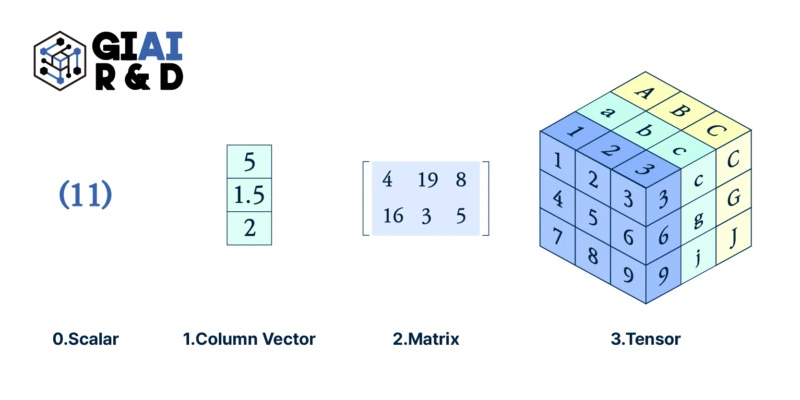

If the ‘fake experts’ had some elementary math and statistics training from undergrad, I believe they would understand that computational science (the academic name for AI) is just a computer version of statistics. AI is actually nothing more than performing statistics faster and more effectively with computer calculations. In other words, AI is a sub-field of statistics. Their questions can be reframed as follows:

- If it is impossible with statistics, wouldn’t it be possible with statistics calculated by computers?

- If it is impossible with elementary arithmetic, wouldn’t it be possible with addition and subtraction?

The inability of statistics to make causal inferences is the same as saying that it is impossible to mechanically eliminate hallucinations in ChatGPT. The social sciences collect potentially correlated variables and use human experience as the final step in concluding causal relationships. Those with academic training in these fields see that ChatGPT is built to mimic cognitive behavior at a shamefully shallow level. The fact that ChatGPT depends on ‘human feedback’ in its custom version of ‘reinforcement learning’ is a clear example of this basic cognitive behavior. We still cannot call it true ‘AI’ because there is no automatic rule for this cheap copy to remove the post hoc fallacy, just as the work of Clive Granger, a Nobel laureate, showed.

Causal inference is not a monotonically increasing challenge, but a multi-dimensional problem

In natural science and engineering, all conditions are limited and controlled in the lab (or by a machine), and there I often see people regard human correction as unscientific. Is human intervention really unscientific? Consider Heisenberg’s uncertainty (indeterminacy) principle. Strictly, it states that a particle’s position and momentum cannot both be known with arbitrary precision. In its popular ‘observer effect’ reading, the stimulus we apply to observe a microscopic phenomenon disturbs it, so the state after the measurement can only be guessed. Yet if no stimulus is applied at all, the current position and condition cannot be identified. Either way, human intervention is needed to obtain at least partial information. Without it, one can never have any scientifically proven information.

Computational science is not much different. To rule out hallucinations, researchers have to either change the data set or re-parameterize the model. The new model may come closer to perfection for that particular purpose, but the modification may surface hidden or unknown problems. The vector space spanned by the data set is so large and so high-dimensional that there is no guarantee a single modification will monotonically improve the model from every angle.
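Below is a tiny, fully hypothetical example of a fix that helps along one axis and hurts along another: tightening the acceptance threshold of a scoring model to cut spurious matches. The scores and labels are invented. Precision and recall stand in for the many ‘angles’ from which a model can be judged.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when everything scoring >= threshold is accepted as a match."""
    accepted = [label for score, label in zip(scores, labels) if score >= threshold]
    true_pos = sum(accepted)
    precision = true_pos / len(accepted) if accepted else 0.0
    recall = true_pos / sum(labels)
    return precision, recall


# Hypothetical model confidence scores and ground truth (1 = genuine match, 0 = spurious one).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]

for threshold in (0.35, 0.75):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold {threshold}: precision {p:.2f}, recall {r:.2f}")
```

Raising the threshold from 0.35 to 0.75 lifts precision from 4/7 (about 0.57) to 2/3 (about 0.67), but it halves recall from 0.8 to 0.4. The model makes fewer false matches, and also finds fewer true ones.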

What is more concerning is that data sets are rarely clean, unless you are dealing with low-noise (or zero-noise) data such as grammatically correct texts and high-quality images. Once researchers step away from natural language and image recognition, data sets are exposed to infinitely many sources of unknown noise. Such high-noise data often suffer from measurement error. Sometimes researchers are unable to collect important variables. These problems fall under the umbrella of ‘endogeneity’, and social scientists have spent nearly a century extracting at least partial information from such faulty data.

Social scientists have adapted statistics in their own ways to deal with ‘endogeneity’. Econometrics is a representative example. It uses instrumental variables to address problems such as measurement error, omitted variables, and two-way influence between explanatory and dependent variables. Work built on concepts such as the ‘Average Treatment Effect’ and the ‘Local Average Treatment Effect’ was recognized with the 2021 Nobel Prize in economics. These methods are not perfect, but they are part of the effort to be a little less wrong.

Some untrained engineers claim AI is magic

Here at GIAI, many of us share our frustration with untrained engineers who confuse AI, a marketing term for limited automation, with real self-evolving ‘intelligence’. The silly claim that one can find causality from correlation is not much different. That they make such bogus arguments already proves they are unaware of Granger causality or of any philosophically robust proposition connecting (or disconnecting) causality and correlation. It also proves they lack the scientific training to handle statistical tools. The current version of AI is no better than pattern matching on higher frequencies, so scientifically untrained data scientists are surely not entitled to be called data scientists.

Let me share one bizarre case that a colleague here at GIAI told me about from his country. In case anyone finds the following example a little insulting, note that half of his jokes are about his own country’s incompetent data scientists. At a tech company in his country, a data scientist was tasked with separating a handful of causal events from a bunch of merely correlated cases. The guy said, “I asked ChatGPT, but it seems there were limitations because my GPT version is 3.5. I should be able to get a better answer if I use 4.0.”

The guy is not only unaware of the post hoc fallacy in data science, but he also most likely does not understand that ChatGPT is no more than a correlation machine that produces text and images from given prompts. This is not something you can learn on the job. This is something you should learn in school, which is precisely why many Asian engineers fall into the misconception that AI is magic. It is often said that Asian engineering programs put less emphasis on mathematical foundations than renowned Western universities do.

In fact, it is not his country alone. The crowding-out effect grows heavier at more engineer-driven conferences and in less sophisticated countries and companies. Despite their shocking incompetence, I guess those guys are highly paid, given the market hype for generative AI. Whenever I come across mockeries like these untrained engineers and buffoonish conferences, I just laugh and shake it off. But when it comes to businesses, I cannot help asking myself whether they are worth the money.