Why can’t companies keep top-tier data scientists / research scientists?

Top brains in AI/Data Science are drawn to challenging jobs like modeling

A 2nd-tier company, with countless malpractices, can seldom meet their expectations

Even with $$$, such companies are soon forced out of the AI game

A few years ago, a large Asian conglomerate acquired a Silicon Valley start-up fresh off an early Series A round. Let’s call it start-up $\alpha$. The M&A team leader later told me that the acquisition was mostly meant to hire the data scientist at the early-stage start-up, but the guy left $\alpha$ on the day the M&A deal was announced.

I had an occasion to sit down with the data scientist a few months later, and asked him why. He tried to avoid the conversation, but it was clear that the changed circumstances were definitely not what he had expected. Unlike the bunch of junior data scientists at Silicon Valley’s large firms, he signaled his grad school training in math and stat well enough that I had a pleasant half-hour talk with him about models. He had been mistreated at large firms, where he was assigned to run SQL queries and build Tableau-based graphs like the other juniors. His PhD training was useless there, so he had decided to become a founding member of $\alpha$, where he could build models and test them with live data. The Asian acquirer, with its bureaucratic HR system, wanted him to give up his agenda and transplant the Silicon Valley large firms’ junior data scientist training system into the acquirer.

Brains go for brains

Given tons of other available positions, he didn’t waste his time. Personally, I have also lost some months of my life to mere SQL queries and fancy graphs. Well, some people may still go for the ‘data scientist’ title, but I am my own man. So was the data scientist from $\alpha$.

These days, Silicon Valley firms call the modelers ‘research scientists’, or similar names. There are also positions called ‘machine learning engineers’, whose jobs are somewhat related to those of ‘research scientists’ but may exclude the mathematical modeling parts and involve far more software engineering. The title ‘data scientist’ is now given to jobs that used to be called ‘SQL monkeys’. As the old nickname suggests, not many trained scientists would love to do the job, even with a competitive salary package.

What companies have to understand is that we, research scientists, are trained not for SQL and Tableau, but for mathematical modeling. It’s like a rigorously trained sushi chef (将太の寿司, Shota no Sushi) being made to cook street food like Chinese noodles.

Let me give you an example from the real corporate world. Let’s say a semiconductor company, $\beta$, wants to build a test model for a wafer / substrate. What I often hear from those companies is that they build a CNN model that reads the wafer’s image and matches it with pre-labeled 0/1 values for error detection. In fact, similar practices have been widely adopted among all Neural Network maniacs. I am not saying it does not work. It works. But then, what would you do if the pre-labeling was done poorly? Say, there were over 10,000 0/1 entries and hardly anybody double-checked the accuracy. Can you rely on that CNN-based model? In addition to that, the model probably requires an enormous amount of computation to build, let alone to test and operate daily.

Wrong practices that drive out brains

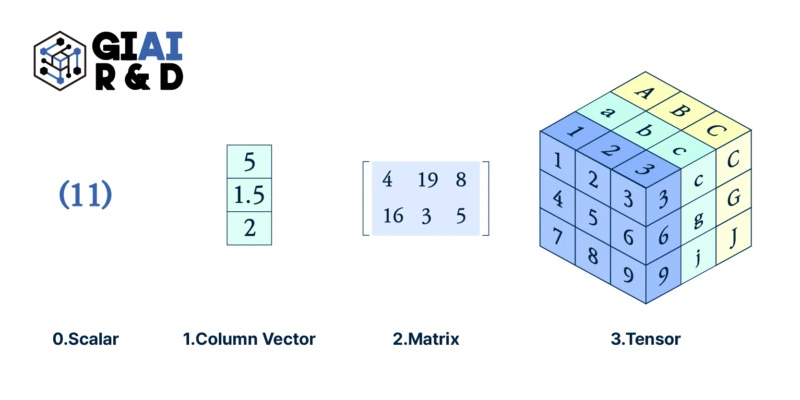

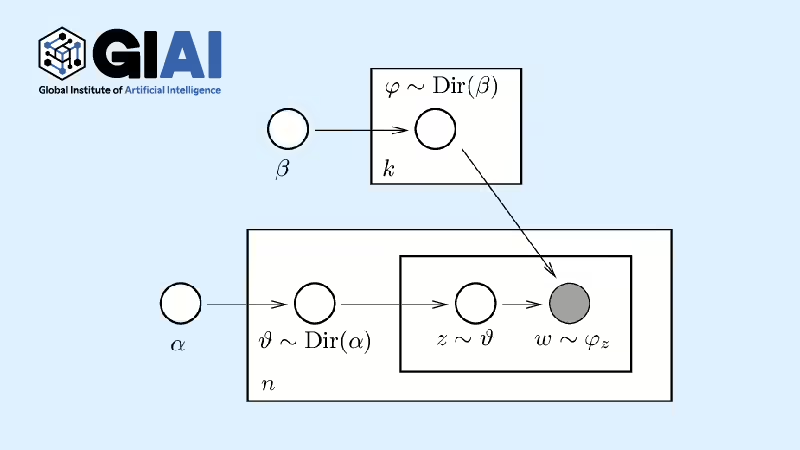

Instead of the costly and less scientific option, we can always build a model that captures the data generating process (DGP). The wafer is composed of $n \times k$ entries, and issues emerge when a whole $n \times 1$ column or $1 \times k$ row of entries goes wrong together. Given that domain knowledge, one can build a model with cross-products between entries in the same row/column. If the entries are all 1 (assume 1 means error), the product is also 1, so the line can easily be identified as a defect case.
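Here is a minimal sketch of that row/column product idea, assuming the wafer test result is already available as an $n \times k$ grid of 0/1 flags with 1 for error. The function name `find_line_defects` and the sample grid are hypothetical, made up for illustration only; a real test model would need the actual layout and error coding of the wafer.

```python
from math import prod


def find_line_defects(wafer):
    """Return (rows, cols): indices of rows and columns whose entries are all 1.

    `wafer` is a list of equal-length lists holding 0/1 flags (1 = error).
    The product of a line of 0/1 flags is 1 only if every flag in it is 1.
    """
    rows = [i for i, row in enumerate(wafer) if prod(row) == 1]
    # zip(*wafer) transposes the grid, so each item is one column.
    cols = [j for j, col in enumerate(zip(*wafer)) if prod(col) == 1]
    return rows, cols


# Hypothetical 4 x 5 wafer: row 2 and column 4 fail entirely,
# while the single error at (0, 1) is an isolated entry.
wafer = [
    [0, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 1],
]

rows, cols = find_line_defects(wafer)
print(rows, cols)  # prints: [2] [4]
```

The whole check is one pass over the $n \times k$ entries with the standard library only, and it never looks at the pre-labeled 0/1 targets that the CNN approach depends on.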

Cost of building a model like that? It just needs your brain. There is a good chance that you don’t even need a dedicated graphics card for that calculation. Maintenance costs are also incomparably smaller than for the CNN version. The concept of computational cost is something that you were supposed to learn in any scientific programming class at school.

For companies sticking to the expensive CNN options, I can always spot the following:

- The management has little to no sense of ‘computational cost’

- The management cannot tell ‘research scientists’ from ‘machine learning engineers’

- The company is full of engineers without any sense of mathematical modeling

If you want to grow as a ‘research scientist’, just like the guy at $\alpha$, then run. If you are smart enough, you must have already run, like the guy at $\alpha$. After all, this is why many 2nd-tier firms like $\beta$ end up with CNN maniacs. Most 2nd-tier firms are unlucky in that they cannot keep research scientists due to a lack of knowledge and experience. Those companies have to spend years and waste millions of dollars to find out that they were so wrong. By the time they come to their senses, it is mostly already way too late. If you are good enough, don’t waste your time on a sinking ship. The management needs a so-called cold-turkey type of shock treatment as a solution. In fact, there was a start-up where I stayed for only a week, which lost at least one data scientist every week. The company went bankrupt within 2 years.

What to do and not to do

At SIAI, I place Scientific Programming right after elementary math/stat training. Students see that each calculation method is an invention to overcome the limitations of earlier options, but that the modification simultaneously constrains the new method in other directions. Neural Networks are just one of the many kinds. Even after this eye-opening experience, some students still remain NN maniacs, and they flunk the Machine Learning and Deep Learning classes. Those students believe that there must exist a grand model that is universally superior to all other models. I wish the world were that simple, but my ML and DL courses break that very belief. Those who are awakened usually become excellent data/research scientists. Many of them come back to tell me that they were able to cut computational costs by 90% just by replacing blindly implemented Neural Network models.

Once they see that dramatic cost reduction, at least some people understand that the earlier practice was wrong. The smarty student may not be happy to suffer from poor management and NN maniacs for long. As the guy at $\alpha$ showed, it is always easier to change your job than to fight to change your incapable management. Managers who move fast may be able to retain the smarty. If not, you are just like $\beta$. You invest a big chunk of money in an M&A just to hire a smarty, but the smarty disappears.

So, you want to keep the smarty? Your solution is dead simple. Test math/stat training levels through scientific programming. You will save tons of $$$ on graphics card purchases.