Following AI hype vs. Studying AI/Data Science

People following AI hype are mostly completely misinformed

AI/Data Science is still limited to statistical methods

Hype can only attract ignorance

As a professor of AI/Data Science, I receive emails from time to time from hyped followers claiming that what they call ‘recent AI’ can solve problems I have been pessimistic about. They usually think ‘recent AI’ is close to ‘Artificial General Intelligence’, meaning a program that learns by itself and exceeds human-level intelligence.

In the early days of my start-up business, I answered them with quality content. Soon, I realized that they just want to hear what they want to hear, and criticize people who say what they don’t want to hear. Last week, I came across a Scientific American article about the history of automatons that were actually people. (Is There a Human Hiding behind That Robot or AI? A Brief History of Automatons That Were Actually People | Scientific American)

AI hype followers’ ungrounded dream for AGI

No doubt many current AI tools are far more advanced than the medieval ‘machines’ discussed in the Scientific American article, but human-made AI tools are still limited to finding patterns and abstracting them by extracting common features. The process requires implementing a logic, whether found by a human or by human-written code, and unfortunately the machine code we rely on is still limited to statistical approaches.

AI hype followers claim that recent AI tools have already overcome the need for human intervention. The truth is that even Amazon’s AI checkout, which the company claimed needed no human cashier, was found to depend on a large number of human inspectors, according to the aforementioned Scientific American article.

As far as I know, 9 out of 10, in fact 99 out of 100, research papers in second-tier (or lower) AI academic journals merely reproduce a few leading papers on different data sets with only minor changes.

The leading papers in AI, as in all other fields, change computational methodologies to fit a new set of data and different purposes, but the technique is unique and it helps with many unsolved issues. Going down to the second tier or below, it is just reproduction, so top-class researchers usually don’t waste time on those papers. The problem is that even the top journals are not reserved for groundbreaking papers. There are not that many groundbreaking papers, by definition. We mostly just move up one step at a time, which is already extremely painful.

Going back to my graduate studies, I tried to build a model of high-speed information flow among financial investors that leads them to follow each other and copy the winning model, which results in financial market overshooting (both hype and crash) at an accelerated speed. The process of information sharing that results in a suboptimal market equilibrium is called the ‘Hirshleifer effect’. Modeling that idea as an equation that fits a variety of cases is a demanding task. Every researcher has his or her own opinion, because they need to solve different problems and they have different backgrounds. It is unlikely that we will end up with one common form for the effect. This is how science works.

Hype that attracts ignorance

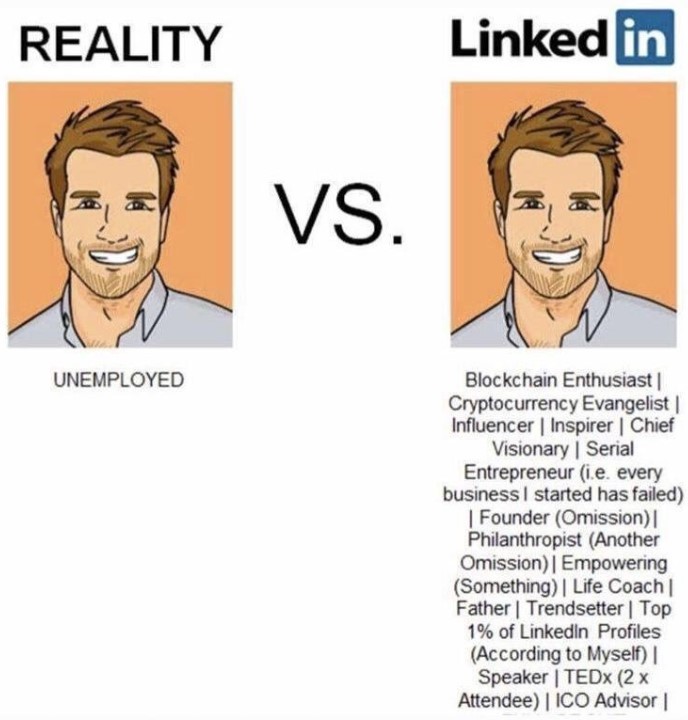

People outside of research, people in marketing who raise AI hype, and people who cannot understand research but can understand marketers’ catchphrases are the ones who frustrate us. As mentioned earlier, I did try to persuade them that it is only hype and that the reality is far from the catchphrases. I gave up doing so many years ago.

Friends of mine who have not pursued grad school sometimes claim that they just need to test the AI model. For example, if an AI engineer claims that his/her AI can beat Wall Street’s top-class fund managers by a double to triple margin, my friends think all they need to do as venture capitalists is to test it for a certain period of time.

At first, the AI engineer may be naive enough to show you failed results. But a series of failed funding attempts will make him smarter. From a certain point, I am sure the AI engineer begins showing off only the successful test cases, taken from a limited time span. My VC friends will likely be fooled, because no algorithm can consistently beat the market. If I had such a model, I would not go for VC funding. I would set up a hedge fund or just trade with my own money. If I knew that I could win with 100% probability and zero risk, why would I share the profit with somebody else?
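The selection effect in the paragraph above can be illustrated with a toy simulation. This is only a sketch under loudly stated assumptions, not a model of any real fund or market: every strategy has zero true edge, daily excess returns are independent Gaussian noise, and the function names (`simulate_strategies`, `cumulative_return`, `cherry_pick`) and all parameter values are hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_strategies(n_strategies=200, n_days=120, daily_vol=0.01, seed=0):
    """Simulate daily excess returns for strategies with ZERO true edge.

    Assumption: each daily return is independent Gaussian noise with
    mean 0 and standard deviation `daily_vol`.
    """
    rng = random.Random(seed)
    return [
        [rng.gauss(0.0, daily_vol) for _ in range(n_days)]
        for _ in range(n_strategies)
    ]


def cumulative_return(daily_returns):
    """Compound a list of daily returns into one cumulative return."""
    total = 1.0
    for r in daily_returns:
        total *= 1.0 + r
    return total - 1.0


def cherry_pick(n_strategies=200, test_days=60, followup_days=60, seed=0):
    """Pick the strategy that looked best in the test window,
    then report how the same strategy did in the following window."""
    paths = simulate_strategies(
        n_strategies, test_days + followup_days, seed=seed
    )
    test_returns = [cumulative_return(p[:test_days]) for p in paths]
    best = max(range(n_strategies), key=lambda i: test_returns[i])
    return {
        "best_test_return": test_returns[best],
        "mean_test_return": statistics.mean(test_returns),
        "best_followup_return": cumulative_return(paths[best][test_days:]),
    }


if __name__ == "__main__":
    for name, value in cherry_pick(seed=1).items():
        print(f"{name}: {value:+.2%}")
```

By construction, the strategy shown to the investor is the maximum over many noise paths, so its test-window return is at least the average across all strategies, even though no strategy has any skill. Its follow-up return is simply another draw of noise, which is why a short, hand-picked test window says little about whether an algorithm can beat the market.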

The hype disappears not by a few failed tests, but by no budget in marketing

Since many ignorant VCs are fooled, the hype continues. Once the funding is secured, the AI engineer runs more marketing tools to show off so that potential investors are brain-washed by the artificial success story.

As the tests failed multiple times, the actual investments made with fund buyers’ money also fail. Clients begin complaining, but the hype is still high and the VC’s funding has not dried up yet. On top of that, the VC is now desperate to raise the value of the AI start-up he/she invested in. He/she also lies. The VC may be uninformed of the failed tests, but it is unlikely that he/she hears complaints from angry clients. The VC’s lies, however unintentional, support the hype. The hype goes on. Until when?

The hype becomes invisible when people stop talking about it. When do people stop talking about it? When the product is no longer new? Well, maybe. But for AI products, if there are no real use cases, people finally understand that it was all marketing hype. The fewer the clients, the less the word of mouth. To pump up the dying hype, the company may put more budget into marketing. It does so until it completely runs out of cash. At some point, there are no more ads, so people just move on to something else. Finally, the hype is gone.

Then, AI hype followers no longer send me emails with disgusting and silly criticism.

Following AI hype vs. Studying AI/Data Science

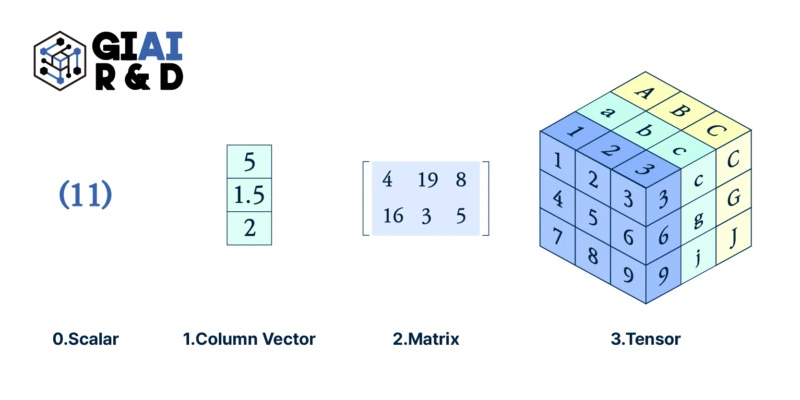

In contrast, there are some people determined to study this subject in depth. They soon realize that copying a few lines of code from GitHub.com does not make them experts. They may read a few ‘tech blogs’ and textbooks, but the smarter they are, the faster they realize that it requires loads of mathematics, statistics, and a great deal more scientific background that they did not study in college.

They begin looking for education programs. Over the last 7 to 8 years, a growing number of universities have created AI/Data Science programs. At the very beginning, many programs focused too much on computer programming, but owing to competition from coding boot camps and pressure from accreditation bodies, most AI/Data Science programs in top US research schools (or schools of a similar level elsewhere in the world) now offer mathematically heavy courses.

Unfortunately, many students fail, because the math and statistics required of professional data scientists go far beyond copying a few lines of code from GitHub.com. My institution, for example, runs bachelor-level courses for the AI MBA and an MSc in AI/Data Science for more qualified students. Most students know the MSc is superior to the AI MBA, but only a few can survive. They can’t even understand the AI MBA’s courses, which are on par with undergraduate courses. Considering the failure rates in STEM majors at top US schools, I don’t think it is a surprise.

Those failing students are still better than AI hype followers, so they are highly unlikely to be fooled like my ignorant VC friends, but unfortunately they are not good enough to earn a demanding STEM degree. I am sorry to see them walk away from the school without a degree, but the school is not a diploma mill.

The distance from AI hype to professional data scientists

Graduates with a shining transcript and a quality dissertation find decent data scientist positions. That gives me a big smile. But then, on the job, sadly most of their clients are mere AI hype followers. Whenever I attend an alumni gathering, I hear tons of complaints from former students about the work environment.

It sounds like a Janus-faced case to me. On one side, company officials hire data scientists because they follow AI hype. They just don’t know how to make AI products. They want to make AI products that are as good as or better than their competitors’. The AI hype followers with money create this data scientist job market. On the other side, unfortunately, the employers are even worse than the failing students. They hear all kinds of AI hype, and they believe all of it. The orders given by such employers are likely to be far from realistic.

Had the employers had the same level of knowledge in data science as I do, would they have hired a team of data scientists for products that cannot be engineered? Had they known that there is no AI algorithm that can consistently beat financial markets, would they have invested in the AI engineer’s financial start-up?

I admit that there are thousands of unsung heroes in this field who receive little attention from the market because they have never jumped into this hype marketing. The capacity of those teams must be the same as or even better than that of world-class researchers. But even with them, there are things that AI/Data Science can do and things it cannot.

Hype can only attract ignorance.