Class 1. Introduction to deep learning

As discussed in [COM502] Machine Learning, the introduction to deep learning begins with the history of computational methods, going back to 1943, when the concept of the neural network first emerged. Moving from regression to graph models, the major building blocks of neural networks, such as the perceptron, the XOR problem, multi-layering, SVM, and pretraining, are briefly discussed.

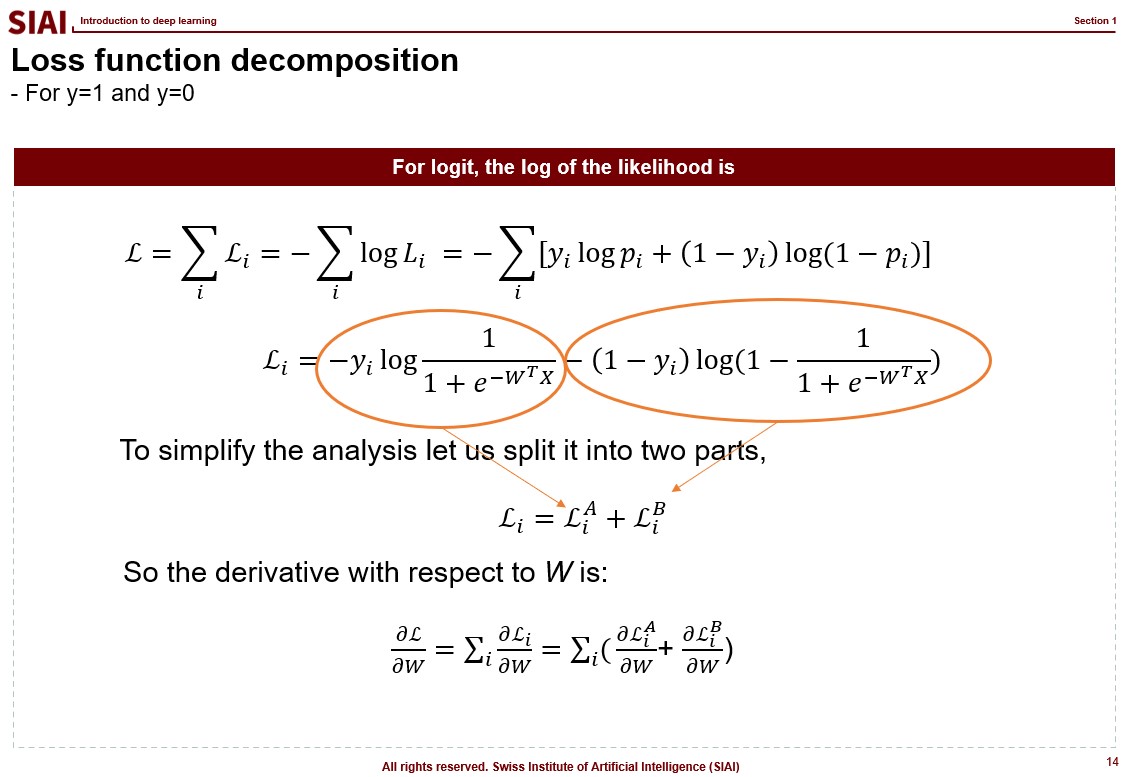

As an introduction, logistic regression is revisited: whatever values the inputs take, the output is converted to a number between 0 and 1. To find the best fit, the binary loss function is introduced in the format below.

$\mathcal{L} (\beta_0, \beta_1) = -\sum_{i} \left[ y_i \log{p_i} + (1-y_i) \log{(1-p_i)} \right]$

Minimizing this function penalizes poor predictions in both cases, $y=0$ and $y=1$. The limitation of logistic regression, and in fact of any regression-style functional form, is that without further assumptions about the non-linear shape, the estimate ends up with a linear form. Even if one introduces non-linear features like $X^2$, the model is still confined to the functional form chosen in advance. The Support Vector Machine (SVM) departs from this limitation, but its form still has to be specified in advance.
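The binary loss can be sketched in a few lines of NumPy. This is only a minimal illustration, not the course's own code: the loss is written with a leading minus sign so that a smaller value means a better fit, and the toy data, the `eps` clipping constant, and the function names are assumptions made for the example.

```python
import numpy as np

def sigmoid(z):
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def binary_loss(beta0, beta1, x, y, eps=1e-12):
    """Negative log-likelihood of a one-feature logistic regression."""
    p = sigmoid(beta0 + beta1 * x)
    p = np.clip(p, eps, 1.0 - eps)  # assumption: guard against log(0)
    return -np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

# Hypothetical toy data: larger x tends to go with y = 1.
x = np.array([-2.0, -1.0, 0.5, 1.5, 3.0])
y = np.array([0.0, 0.0, 1.0, 1.0, 1.0])

loss_flat = binary_loss(0.0, 0.0, x, y)  # p_i = 0.5 for every observation
loss_good = binary_loss(0.0, 2.0, x, y)  # slope pointing in the right direction
print(loss_flat, loss_good)
```

With all coefficients at zero every $p_i$ equals 0.5, so the loss is $5 \log 2$; a slope with the right sign gives a smaller loss on this toy data.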

Neural networks partly solve this limitation in that they allow researchers to bypass equational assumptions and let the network find the best fit to the data. Though the result still depends on how the network is structured, which activation functions are used, and how much relevant data is fed to the network, it is a significant step beyond the functionally limited estimation strategies that had been predominant in computational methods.

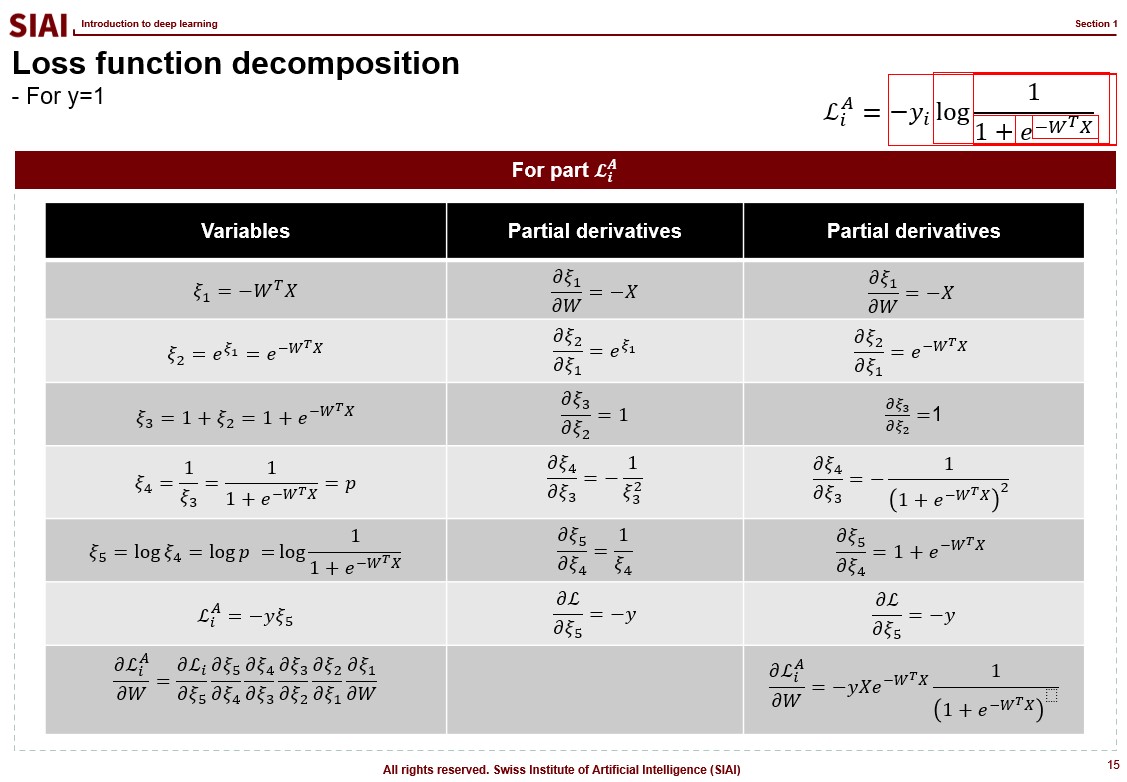

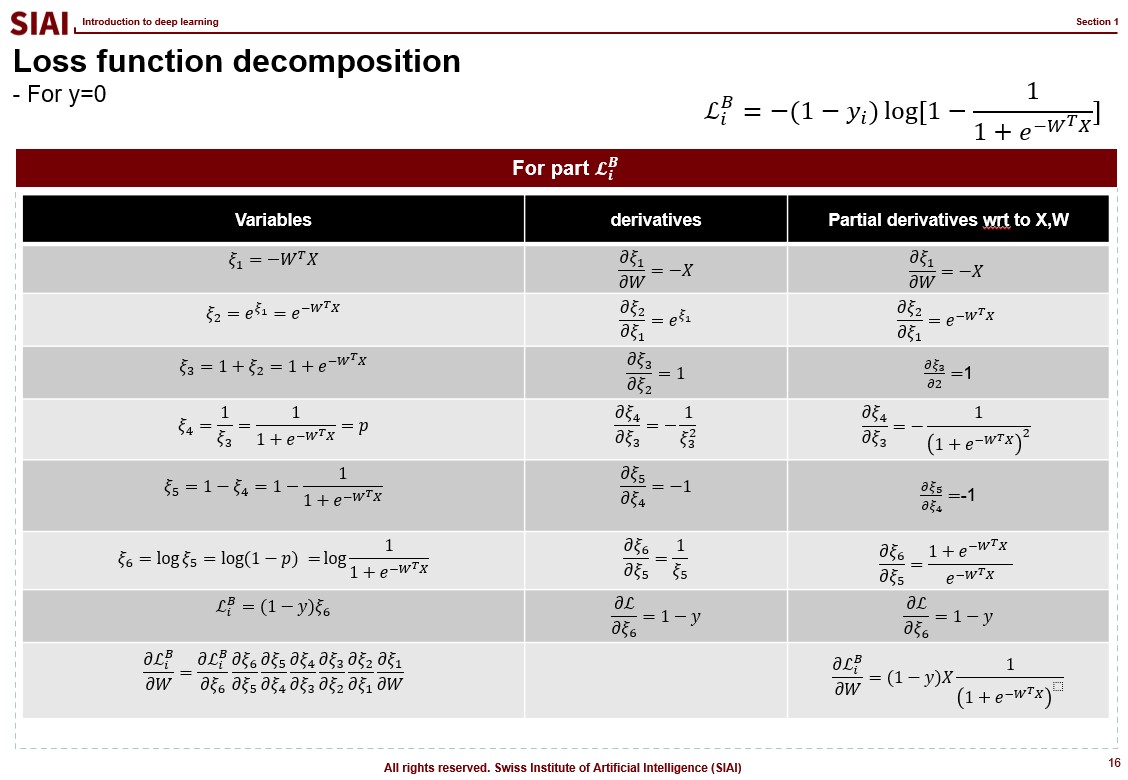

In essence, the shape of the loss function is the same, but deep learning handles the optimization by leveraging the chain rule for partial derivatives, which speeds up the computationally heavy calculation.

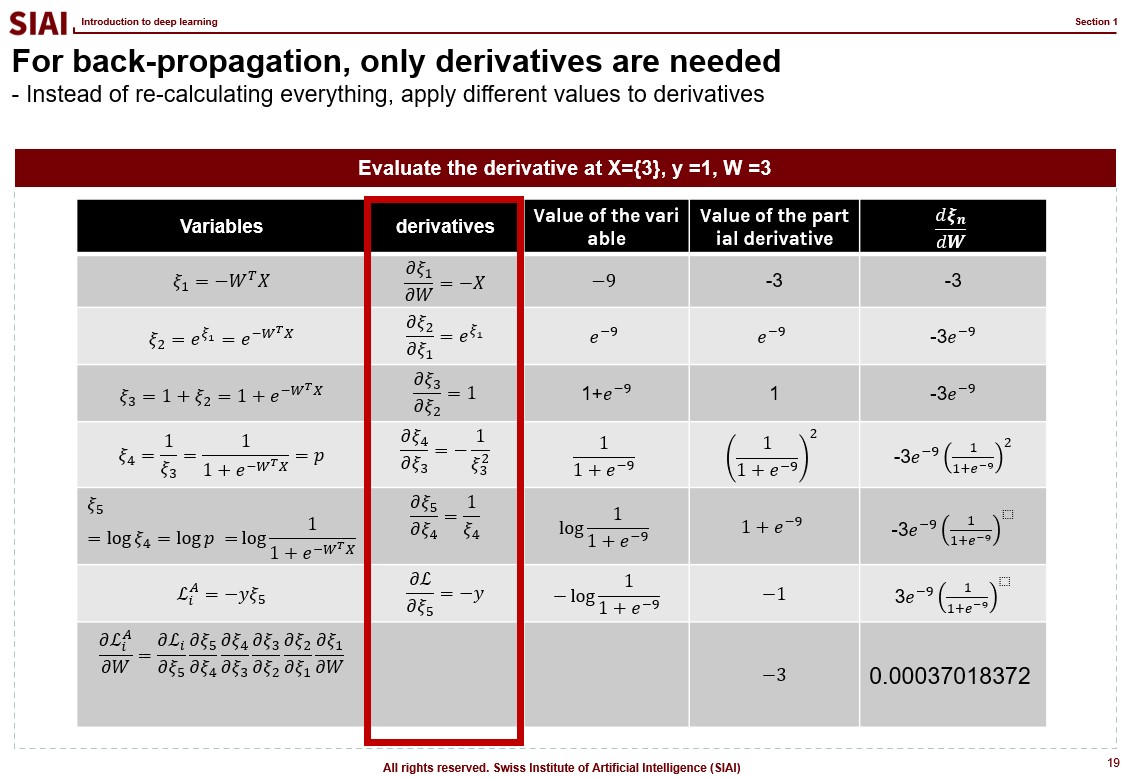
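To make the chain rule concrete, here is a small sketch with two chained sigmoid units and the binary loss. The network shape, the weight values, and all names are hypothetical choices for illustration. The backward pass multiplies local derivatives and reuses the upstream terms, and a finite-difference estimate is computed alongside as a sanity check.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(w1, w2, x):
    """Two chained units: x -> h = sigmoid(w1 * x) -> p = sigmoid(w2 * h)."""
    h = sigmoid(w1 * x)
    p = sigmoid(w2 * h)
    return h, p

def loss(w1, w2, x, y):
    _, p = forward(w1, w2, x)
    return -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

def grads_chain_rule(w1, w2, x, y):
    """Backward pass: each line is one local derivative times the upstream term."""
    h, p = forward(w1, w2, x)
    dL_dz2 = p - y                   # dL/dp * dp/dz2 for sigmoid + binary loss
    dL_dw2 = dL_dz2 * h              # z2 = w2 * h
    dL_dh = dL_dz2 * w2
    dL_dz1 = dL_dh * h * (1.0 - h)   # sigmoid'(z1) = h * (1 - h)
    dL_dw1 = dL_dz1 * x              # z1 = w1 * x
    return dL_dw1, dL_dw2

# Hypothetical weights and a single observation.
w1, w2, x, y = 0.3, -0.7, 1.5, 1.0
g1, g2 = grads_chain_rule(w1, w2, x, y)

# Central finite differences as an independent check.
step = 1e-6
n1 = (loss(w1 + step, w2, x, y) - loss(w1 - step, w2, x, y)) / (2 * step)
n2 = (loss(w1, w2 + step, x, y) - loss(w1, w2 - step, x, y)) / (2 * step)
print(g1, n1, g2, n2)
```

Note that `dL_dz2` is computed once and reused for both weights. That reuse of intermediate terms is where the saving comes from as networks get deeper.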

With the benefit of decomposition and chained partial differentiation, back-propagation can compute the gradients in a single higher-order matrix (or tensor) operation, instead of feeding $n \times k$ columns separately. An example is illustrated below.

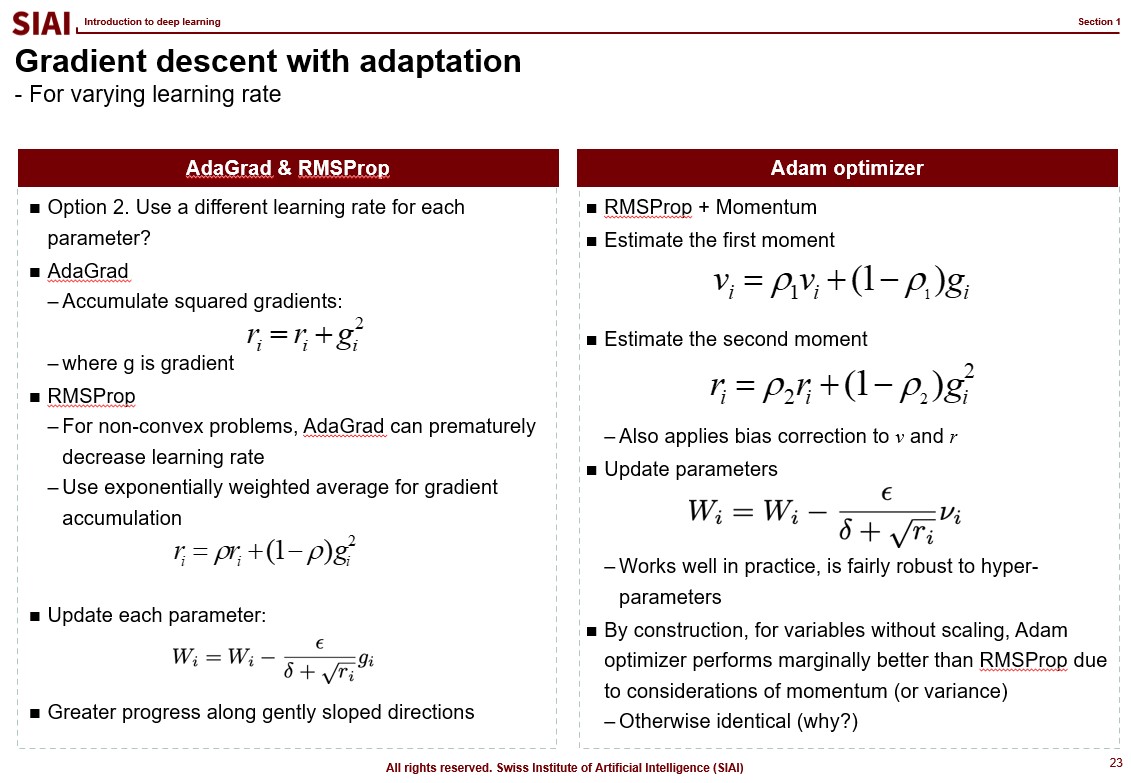
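The same idea can be sketched numerically. Assuming a logistic model with $n$ observations and $k$ features (the sizes, the random data, and the function names below are all hypothetical), the gradient of the binary loss can be accumulated entry by entry or obtained from one matrix product. Both give the same numbers.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 200, 5                        # hypothetical sizes
X = rng.normal(size=(n, k))
y = (rng.random(n) < 0.5).astype(float)
beta = rng.normal(size=k)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_loop(X, y, beta):
    """Accumulate the gradient one observation and one column at a time."""
    grad = np.zeros_like(beta)
    for i in range(X.shape[0]):
        p_i = sigmoid(X[i] @ beta)
        for j in range(X.shape[1]):
            grad[j] += (p_i - y[i]) * X[i, j]
    return grad

def grad_matrix(X, y, beta):
    """Same gradient expressed as a single matrix product."""
    p = sigmoid(X @ beta)
    return X.T @ (p - y)

g_loop = grad_loop(X, y, beta)
g_mat = grad_matrix(X, y, beta)
print(np.max(np.abs(g_loop - g_mat)))
```

The matrix form hands the whole computation to optimized linear algebra routines, which is why frameworks express back-propagation in terms of matrix and tensor operations.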

To further speed up the computation, one can modify the gradient approximation with varying weights, use different learning rates for the vertical and horizontal directions, or even apply a correction based on a dynamically changing second moment (or variance-covariance) structure. In fact, many variations are possible, and the right choice depends on the data structure. In general, the Adam optimizer tends to perform marginally faster than RMSProp, as it incorporates both first and second moments. The advantage will most likely disappear if the data set does not have dynamically changing variance, because the second moment then carries no more information than the base case.
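For reference, the two update rules can be written side by side. The sketch below uses the commonly published forms of RMSProp and Adam with typical default hyperparameters. The quadratic test function, the starting point, and the step count are assumptions chosen only to show the mechanics, so the run says nothing general about which optimizer is faster.

```python
import numpy as np

def rmsprop_step(w, g, v, lr=0.01, rho=0.9, eps=1e-8):
    """Scale each coordinate by a running average of squared gradients."""
    v = rho * v + (1.0 - rho) * g**2
    w = w - lr * g / (np.sqrt(v) + eps)
    return w, v

def adam_step(w, g, m, v, t, lr=0.01, b1=0.9, b2=0.999, eps=1e-8):
    """Add a running mean of gradients (first moment) and bias correction."""
    m = b1 * m + (1.0 - b1) * g
    v = b2 * v + (1.0 - b2) * g**2
    m_hat = m / (1.0 - b1**t)        # bias-corrected first moment
    v_hat = v / (1.0 - b2**t)        # bias-corrected second moment
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

# Hypothetical test problem: a quadratic bowl that is steeper along one axis.
scales = np.array([10.0, 1.0])

def f(w):
    return 0.5 * np.sum(scales * w**2)

def grad_f(w):
    return scales * w

w_start = np.array([1.0, 1.0])
w_rms, v_rms = w_start.copy(), np.zeros(2)
w_adam, m_adam, v_adam = w_start.copy(), np.zeros(2), np.zeros(2)

for t in range(1, 301):
    w_rms, v_rms = rmsprop_step(w_rms, grad_f(w_rms), v_rms)
    w_adam, m_adam, v_adam = adam_step(w_adam, grad_f(w_adam), m_adam, v_adam, t)

print(f(w_start), f(w_rms), f(w_adam))
```

Both rules divide by the square root of the running second moment, which gives each direction its own effective learning rate. Adam additionally smooths the gradient itself through the first moment `m`.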