Class 6. Recurrent Neural Network

Recurrent Neural Network (RNN) is a neural network model that applies the same process repeatedly, with each step conditioned on a state carried over from the previous step. This state is often called 'memory', and depending on how long the memory stays valid and how heavily the model relies on it, there can be countless variations of RNN. Whatever the underlying data structure it is fitted to, however, the RNN model is essentially a non-linear and multivariable extension of the Kalman filter.

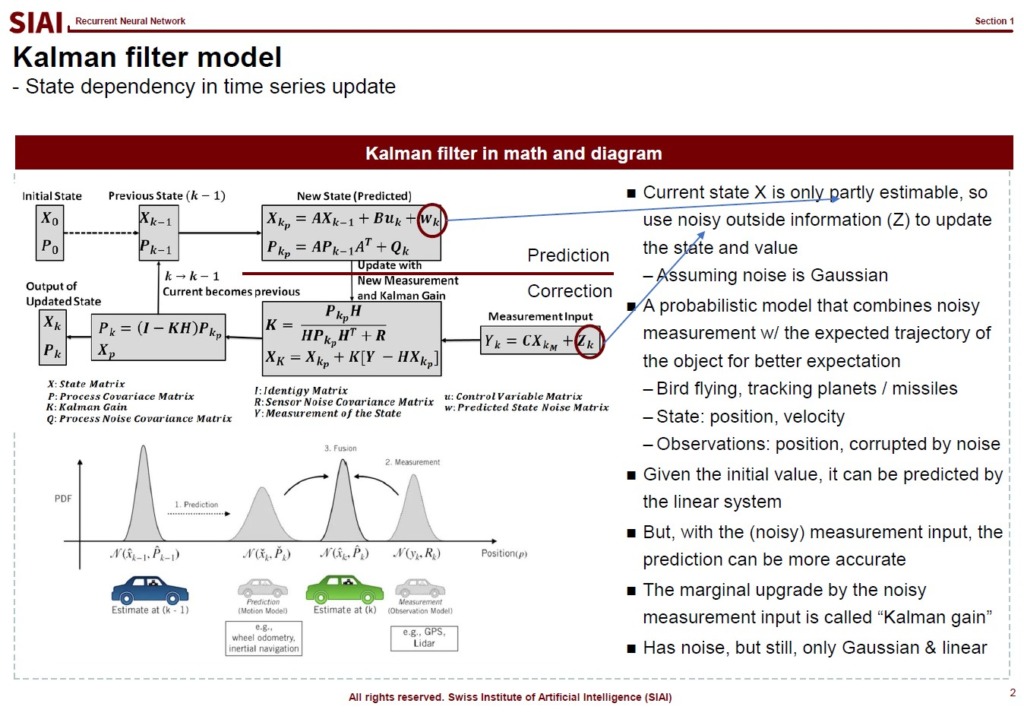

Given that NN models are just an extension of Factor Analysis to non-linear and multivariable cases with a network structure, the step from the Kalman filter to RNN follows the same logic. The Kalman filter updates the previous state's estimate after observing new data and the resulting prediction error. Say one predicts that a car will move from position A to B, but in reality the car is found at position D. The error, e = D - B, should be used to correct the model's next-stage prediction. Assume that you give 50% weight to the error correction, because part of the error reflects measurement noise rather than a genuine mistake in the prediction. Then the updated model gives the expected position C = B + 0.5e. In other words, at every stage the previous stage's error is used for correction, so that errors in later stages can hopefully be minimized.
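A minimal sketch of the car example's arithmetic, assuming a single position coordinate and a fixed gain of 0.5. In a full Kalman filter the gain is computed at each step from the prediction and measurement variances rather than set by hand. The function name `kalman_correct` and the positions 10 and 14 are hypothetical, chosen only for illustration.

```python
def kalman_correct(predicted: float, observed: float, gain: float) -> float:
    """Scalar Kalman-style correction: move the prediction toward the observation."""
    error = observed - predicted      # e = D - B
    return predicted + gain * error   # C = B + gain * e


# Hypothetical positions on a line: predicted B = 10, observed D = 14
B, D = 10.0, 14.0
C = kalman_correct(B, D, gain=0.5)
print(C)  # 12.0, halfway between B and D
```

A gain of 0 ignores the observation and keeps B; a gain of 1 trusts the observation fully and jumps to D. Anything in between lands at a point like C.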

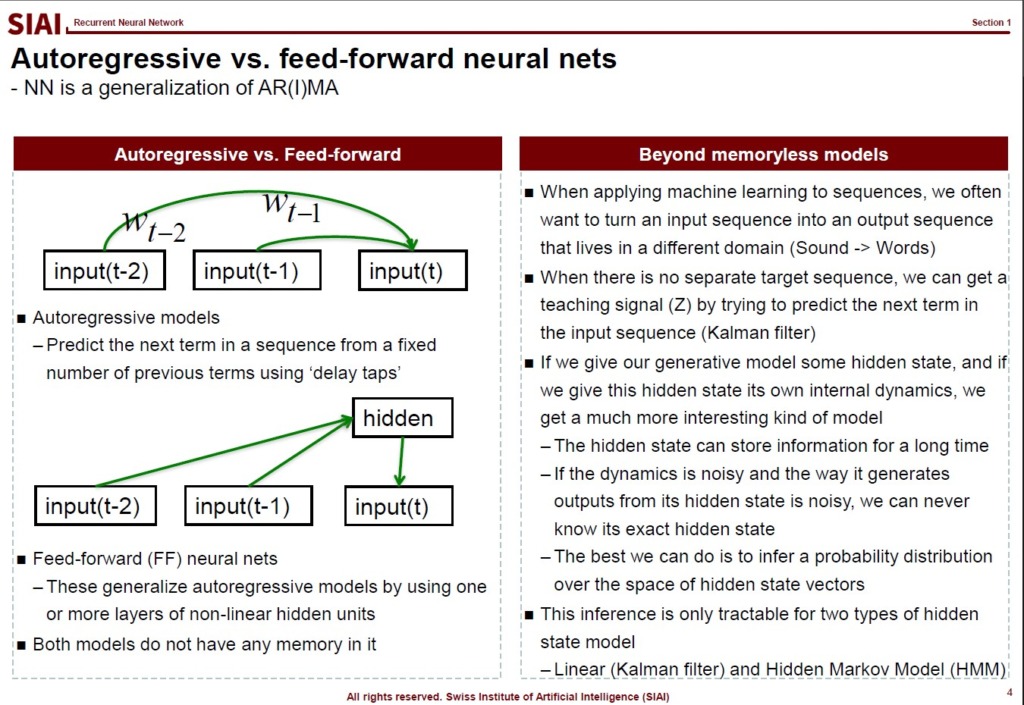

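Then, we can see that two aspects of RNN are just another combination of traditional statistical models. The first comes from autoregressive processes, where memory is preserved. This does not mean that memory is preserved completely (LSTM, a variation of RNN, is designed to retain memory over much longer spans), but an ARMA process keeps the memory of past values for some time into the future, with its influence fading gradually.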
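To see the autoregressive side concretely, consider a sketch assuming a scalar hidden state and no non-linearity (identity activation). The recurrence h_t = w·h_{t-1} + u·x_t then has the same form as an AR(1) process driven by the input, and a one-time shock fades geometrically at rate w. The function `linear_rnn` and the values w = 0.8, u = 1.0 are hypothetical.

```python
def linear_rnn(inputs, w, u, h0=0.0):
    """Scalar RNN with identity activation: h_t = w * h_{t-1} + u * x_t.

    Without a non-linearity this is an AR(1)-type recursion driven by x_t.
    """
    h = h0
    states = []
    for x in inputs:
        h = w * h + u * x  # old memory scaled by w, plus the new input
        states.append(h)
    return states


# A single unit shock followed by zeros: its memory decays as w**t.
impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
for t, h in enumerate(linear_rnn(impulse, w=0.8, u=1.0)):
    print(t, round(h, 5))
```

With |w| < 1 the shock never disappears at once but shrinks step by step. A practical RNN replaces the scalars w and u with learned matrices and wraps the sum in an activation such as tanh.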

The second aspect, error correction through the state variable, is similar to the Kalman filter. An RNN uses its state variable to decide how much memory to carry forward (gated variants such as LSTM do this explicitly, turning memory on or skipping it). When memory is kept, the model reflects a proportional amount of it, depending on the choice of weights, with some correction from the new input. In the Kalman filter this is called weighted error correction: we end up with C, instead of B or D. In an RNN, the corresponding weights are not fixed by a formula but learned through the forward pass and backpropagation.
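The weighted correction can be sketched with a GRU-style update gate, a standard gated RNN design used here as an illustrative stand-in. The gate z lies between 0 and 1 and blends the old memory with a candidate value: (1 - z)·h_prev + z·candidate, which is the same convex combination as the Kalman update C = B + K(D - B) = (1 - K)B + K·D. Assumptions: scalar state, the candidate is passed in directly (a real GRU computes it with tanh and a reset gate), the gate weights `w_z`, `u_z`, `b_z` are hypothetical, and references differ on which side of the blend z multiplies.

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def gated_update(h_prev, x, h_candidate, w_z, u_z, b_z):
    """GRU-style blend of old memory and a candidate state (scalar sketch)."""
    z = sigmoid(w_z * x + u_z * h_prev + b_z)  # update gate in (0, 1); learned in training
    return (1.0 - z) * h_prev + z * h_candidate


# With all gate weights zero, z = 0.5: the same 50% correction as the car example.
print(gated_update(h_prev=10.0, x=14.0, h_candidate=14.0, w_z=0.0, u_z=0.0, b_z=0.0))  # 12.0
```

The difference from the Kalman filter is that z is not derived from noise variances; it is computed from the current input and state through weights fitted by backpropagation.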

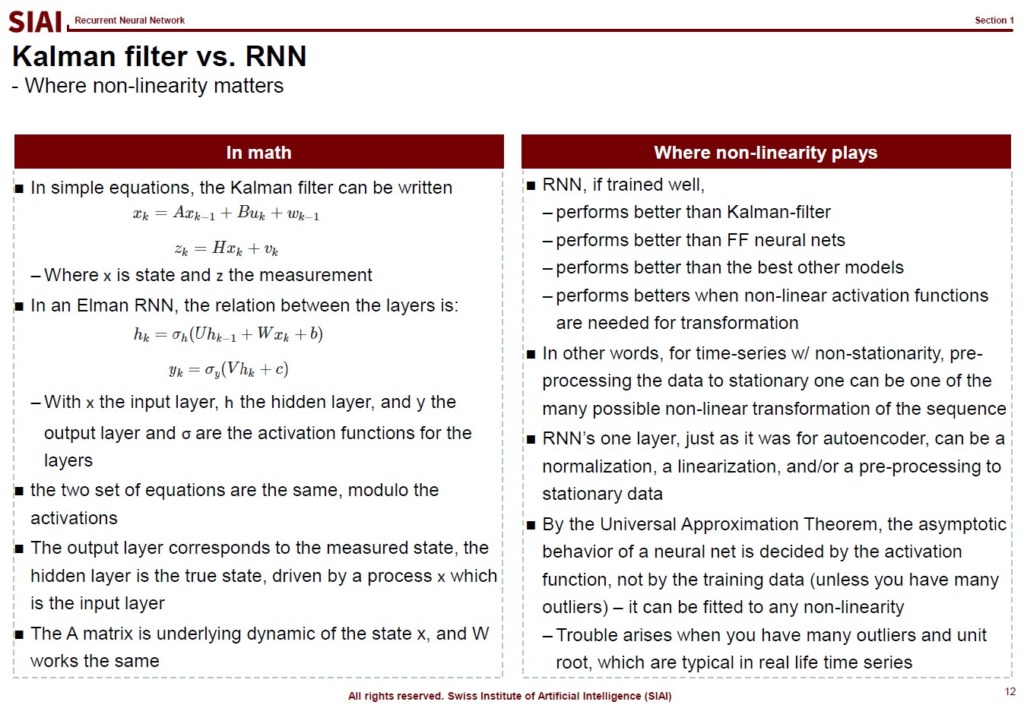

RNN can outperform many time-series models with memory largely because it can fit non-linear and multivariable cases. An equation-level comparison between the Kalman filter and RNN shows a striking similarity in functional form, apart from non-linear transformations such as the activation function.
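One way to write such a comparison down, assuming a linear Gaussian state-space model s_t = F s_{t-1} + w_t, y_t = H s_t + v_t with a constant (steady-state) Kalman gain K. The symbols s, F, H, K, W, U, b and σ are our own notation for this sketch and may not match the figures. Substituting the prediction step into the update step gives a recursion of the same shape as an RNN cell fed with the observation y_t:

$$
\begin{aligned}
\text{Kalman predict:}\quad & \hat{s}_{t|t-1} = F\,\hat{s}_{t-1|t-1} \\
\text{Kalman update:}\quad & \hat{s}_{t|t} = \hat{s}_{t|t-1} + K\,\bigl(y_t - H\,\hat{s}_{t|t-1}\bigr) \\
\text{combined:}\quad & \hat{s}_{t|t} = (I - KH)\,F\,\hat{s}_{t-1|t-1} + K\,y_t \\
\text{RNN:}\quad & h_t = \sigma\bigl(W\,h_{t-1} + U\,y_t + b\bigr)
\end{aligned}
$$

With σ set to the identity and b = 0, the combined Kalman recursion is a linear RNN with W = (I - KH)F and U = K. In the scalar car example (F = H = 1, K = 0.5) this reduces to C = 0.5B + 0.5D. A general RNN learns W, U and b from data and applies a non-linear σ.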

One can construct a non-linear Kalman filter and even include more than one variable, but the multivariable version then calls for VAR (Vector AutoRegressive)-type models with a functional form fixed in advance. RNN, on the other hand, relies more on the data to shape the functional form. Although this dependence on data brings problems similar to those we have seen with other regular NN models, the better fit and flexibility can decently compensate for the additional computational cost of certain data processes.
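To make "fixed functional form" concrete, here is a sketch of a two-variable VAR(1), y_t = A·y_{t-1} + e_t, on simulated data. The model is linear by assumption and only the matrix A is estimated, here by ordinary least squares with NumPy. The true coefficients, noise scale, sample size and seed are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
A_true = np.array([[0.5, 0.1],
                   [0.0, 0.3]])  # hypothetical VAR(1) coefficients
T = 5000

# Simulate y_t = A y_{t-1} + e_t with small Gaussian noise.
y = np.zeros((T, 2))
for t in range(1, T):
    y[t] = A_true @ y[t - 1] + rng.normal(scale=0.1, size=2)

# Regress y_t on y_{t-1}. Stacked row-wise this is Y = X A^T, so OLS returns A^T.
X, Y = y[:-1], y[1:]
B, *_ = np.linalg.lstsq(X, Y, rcond=None)
A_hat = B.T
print(np.round(A_hat, 2))
```

Whatever the data look like, this model can only ever return a linear map A. An RNN would instead let the data decide, through its learned weights and activation, how past values feed into the next step.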